Torproject Sysadmin Team

The Torproject System Administration Team is the team that keeps

torproject.org's infrastructure going. This is the internal team

wiki. It has mostly documentation mainly targeted for the team

members, but also has useful information for people with

torproject.org accounts.

The documentation is split into the following sections:

- Introduction to the team, what it does, key services and policies

- Support - in case of fire, press this button

- User documentation - aimed primarily at non-technical users and the general public

- Sysadmin how-to's - procedures specifically written for sysadmins

- Service list - service list and documentation

- Machine list - the full list of machines managed by TPA (in LDAP)

- Policies - major decisions and how they are made

- Providers - info about service and infrastructure providers

- Meetings - minutes from our formal meetings

- Roadmaps - documents our plans for the future (and past successes of course)

Our source code is all hosted on GitLab.

This is a wiki. We welcome changes to the content! If you have the right permissions -- which is actually unlikely, unfortunately -- you can edit the wiki in GitLab directly. Otherwise you can submit a pull request on the wiki replica. You can also clone the git repository and send us a patch by email.

To implement a similar merge request workflow on your GitLab wiki, see TPA's documentation about Accepting merge requests on wikis.

This documentation is primarily aimed at users.

Note: most of this documentation is a little chaotic and needs to be merged with the service listing. You might interested in one of the following quick links instead:

Other documentation:

- accounts

- admins

- bits-and-pieces

- extra

- hardware-requirements

- lektor-dev-macos

- naming-scheme

- reporting-email-problems

- services

- ssh-jump-host

- static-sites

- svn-accounts

- torproject.org Accounts

Note that this documentation needs work, as it overlaps with user creation procedures, see issue 40129.

torproject.org Accounts

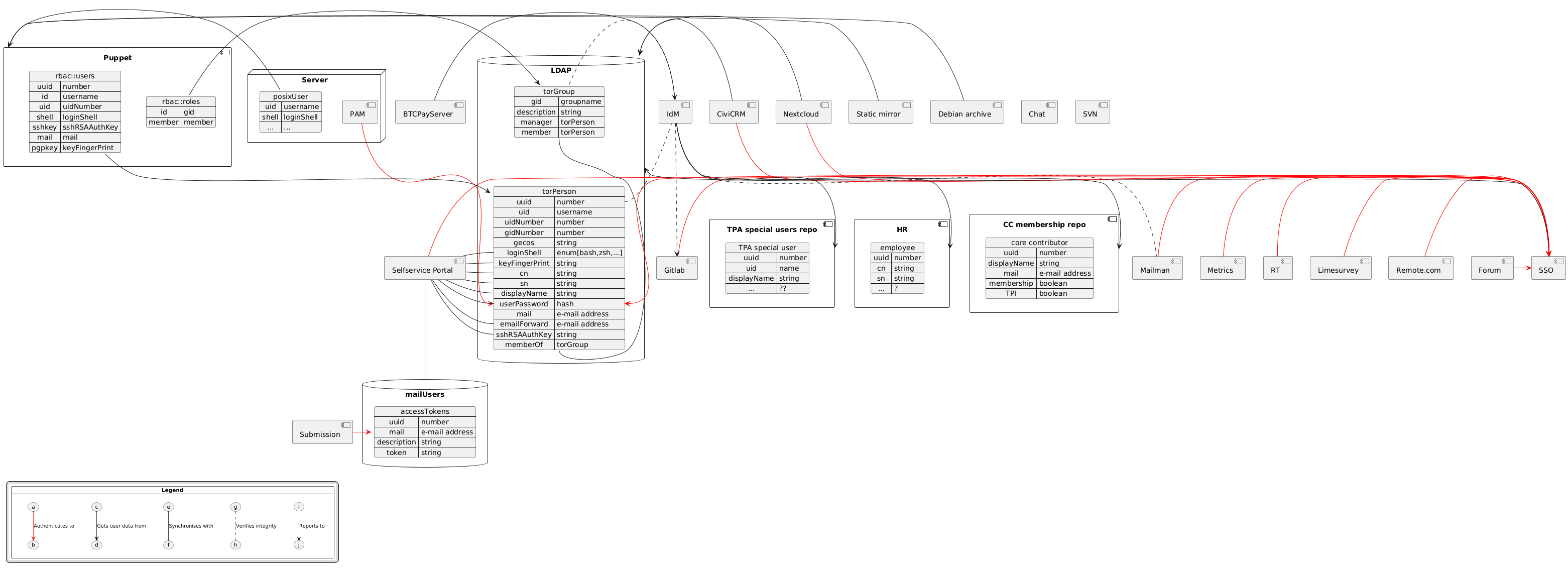

The Tor project keeps all user information in a central LDAP database which governs access to shell accounts, git (write) access and lets users configure their email forwards.

It also stores group memberships which in turn affects which users can log into which hosts.

This document should be consistent with the Tor membership policy, in case of discrepancy between the two documents, the membership policy overrules this document.

Decision tree: LDAP account or email alias?

Here is a simple decision tree to help you decide if a new contributor needs an LDAP account, or if an email alias will do. (All things being equal, it's better to set people up with only an email alias if that's all they need, since it reduces surface area which is better for security.)

LDAP account reasons

Regardless of whether they are a Core Contributor:

- Are they a maintainer for one of our official software projects, meaning

they need to push commits (write) to one of our git repos?

- They should get an LDAP account.

- Do they need to access (read) a private git repo, like "dirauth-conf"?

- They should get an LDAP account.

Are they a Core Contributor?

- Do they want to make their own personal clones of our git repos, for example to propose patches and changes?

- They don't need an LDAP account for just this case anymore, since gitlab can host git repos. (They are also welcome to put their personal git repos on external sites if they prefer.)

- Do they need to log in to our servers to use our shared irc host?

- They should get an LDAP account.

- If they're not a Core Contributor, they should put their IRC somewhere else, like pastly's server.

- Do they need to log in to our servers to maintain one of our websites or

services?

- An existing Core Contributor should request an LDAP account.

- If they're not a Core Contributor, but they are a staff member who needs to maintain services, then Tor Project Inc should request an LDAP account.

- If they are not a staff member, then an existing Core Contributor should request an LDAP account, and explain why they need access.

- Do they need to be able to send email using an @torproject.org address?

- In our 2022/2023 process of locking down email, it's increasingly necessary for people to have a full ldap account in order to deliver their tor mail to the internet properly.

See New LDAP accounts for details.

Email alias reasons

If none of the above cases apply:

- Are they a Core Contributor?

- An existing Core Contributor should request an email alias.

- Are they a staff member?

- Tor Project Inc should request an email alias.

See Changing email aliases for details.

New LDAP accounts

New accounts have to be sponsored by somebody who already has a torproject.org account. If you need an account created, please find somebody in the project who you are working with and ask them to request an account for you.

Step 1

The sponsor will collect all required information:

- name,

- initial forwarding email address (the user can change that themselves later),

- OpenPGP key fingerprint,

- desired username.

The sponsor is responsible for verifying the information's accuracy, in particular establishing some confidence that the key in question actually belongs to the person that they want to have access.

The user's OpenPGP key should be available from the public keyserver network.

The sponsor will create a ticket in GitLab:

- The ticket should include a short rationale as to why the account is required,

- contain all the pieces of information listed above, and

- should be OpenPGP signed by the sponsor using the OpenPGP key we have on

file for them. Please enclose the OpenPGP clearsigned blob using

{{{and}}}.

username policy

Usernames are allocated on a first-come, first-served basis. Usernames

should be checked for conflict with commonly used administrative

aliases (root, abuse, ...) or abusive names (killall*, ...). In

particular, the following have special meaning for various services

and should be avoided:

root

abuse

arin-admin

certmaster

domainadmin

hostmaster

mailer-daemon

postmaster

security

webmaster

That list, taken from the leap project is not exhaustive and your own judgement should be used to spot possibly problematic aliases. See also those other possible lists:

Step n+1

Once the request has been filed it will be reviewed by Roger or Nick and either approved or rejected.

If the board indicates their assent, the sysadmin team will then create the account as requested.

Retiring accounts

If you won't be using your LDAP account for a while, it's good security hygiene to have it disabled. Disabling an LDAP account is a simple operation, and reenabling an account is also simple, so we shouldn't be shy about disabling accounts when people stop needing them.

To simplify the review process for disable requests, and because disabling by mistake has less impact than creating a new LDAP account by mistake, the policy here is "any two of {Roger, Nick, Shari, Isabela, Erin, Damian} are sufficient to confirm a disable request."

(When we disable an LDAP account, we should be sure to either realize and accept that email forwarding for the person will stop working too, or add a new line in the email alias so email keeps working.)

Getting added to an existing group/Getting access to a specific host

Almost all privileges in our infrastructure, such as account on a particular host, sudo access to a role account, or write permissions to a specific directory, come from group memberships.

To know which group has access to an specific host, FIXME.

To get added to some unix group, it has to be requested by a member of that group. This member has to create a new ticket in GitLab, OpenPGP-signed (as above in the new account creation section), requesting who to add to the group.

If a new group needs to be created, FIXME.

The reasons why a new group might need to be created are: FIXME.

Should the group be orphaned or have no remaining active members, the same set of people who can approve new account requests can request you be added.

To find out who is on a specific group you can ssh to perdulce:

ssh perdulce.torproject.org

Then you can run:

getent group

See also: the "Host specific passwords" section below

Changing email aliases

Create a ticket specifying the alias, the new address to add, and a brief motivation for the change.

For specifics, see the "The sponsor will create a ticket" section above.

Adding a new email alias

Personal Email Aliases

Tor Project Inc can request new email aliases for staff.

An existing Core Contributor can request new email aliases for new Core Contributors.

Group Email Aliases

Tor Project Inc and Core Contributors can request group email aliases for new functions or projects.

Getting added to an existing email alias

Similar to being added to an LDAP group, the right way to get added to an existing email alias is by getting somebody who is already on that alias to file a ticket asking for you to be added.

Changing/Resetting your passwords

LDAP

If you've lost your LDAP password, you can request that a new one be generated. This is done by sending the phrase "Please change my Debian password" to chpasswd@db.torproject.org. The phrase is required to prevent the daemon from triggering on arbitrary signed email. The best way to invoke this feature is with

echo "Please change my Debian password" | gpg --armor --sign | mail chpasswd@db.torproject.org

After validating the request the daemon will generate a new random password,

set it in the directory and respond with an encrypted message containing the

new password. This new password can then be used to

login (click the "Update my info"

button), and use the "Change password" fields to create a new LDAP

password.

Note that LDAP (and sudo passwords, below) changes are not instantaneous: they can take between 5 to 8 minutes to propagate to any given host.

More specifically, the password files are generated on the master LDAP server every five minutes, starting at the third minute of the hour, with a cron schedule like this:

3,8,13,18,23,28,33,38,43,48,53,58

Then those files are synchronized on a more standard 5 minutes schedule to all hosts.

There are also delays involved in the mail loop, of course.

Host specific passwords / sudo passwords

Your LDAP password can not be used to authenticate to sudo on

servers. It can only allow to log you in through SSH, but you need a

different password to get sudo access, which we call the "sudo

password".

To set the sudo password:

- go to the user management website

- pick "Update my info"

- set a new (strong) sudo password

If you want, you can set a password that works for all the hosts that are managed by torproject-admin, by using the "wildcard ("*"). Alternatively, or additionally, you can have per-host sudo passwords -- just select the appropriate host in the pull-down box.

Once set on the web interface, you will have to confirm the new settings by sending a signed challenge to the mail interface. The challenge is a single line, without line breaks, provided by the web interface. With the challenge first you will need to produce an openpgp signature:

echo 'confirm sudopassword ...' | gpg --armor --sign

With it you can compose an email to changes@db.torproject.org, sending the challenge in the body followed by the openpgp signature.

Note that setting a sudo password will only enable you to use sudo to configured accounts on configured hosts. Consult the output of "sudo -l" if you don't know what you may do. (If you don't know, chances are you don't need to nor can use sudo.)

Do mind the delays in LDAP and sudo passwords change, mentioned in the previous section.

Changing/Updating your OpenPGP key

If you are planning on migrating to a new OpenPGP key and you also want to change your key in LDAP, or if you just want to update the copy of your key we have on file, you need to create a new ticket in GitLab:

- The ticket should include your username, your old OpenPGP fingerprint and your new OpenPGP fingerprint (if you're changing keys).

- The ticket should be OpenPGP signed with your OpenPGP key that is currently stored in LDAP.

Revoked or lost old key

If you already revoked or lost your old OpenPGP key and you migrated to a new one before updating LDAP, you need to find a sponsor to create a ticket for you. The sponsor should create a new ticket in GitLab:

- The ticket should include your username, your old OpenPGP fingerprint and your new OpenPGP fingerprint.

- Your OpenPGP key needs to be on a public keyserver and be signed by at least one Tor person other than your sponsor.

- The ticket should be OpenPGP signed with the current valid OpenPGP key of your sponsor.

Actually updating the keyring

See the new-user HOWTO.

Moved to policy/tpa-rfc-2-support.

Bits and pieces of Tor Project infrastructure information

A collection of information looking for a better place, perhaps after being expanded a bit to deserve their own page.

Backups

- We use Bacula to make backups, with one host running a director (currently bacula-director-01.tpo) and another host for storage (currently brulloi.tpo).

- There are

BASEfiles andWALfiles, the latter for incremental backups. - The logs found in

/var/log/bacula-main.logand/var/log/bacula/seem mostly empty, just like the systemd journals.

Servers

-

There's one

directorand onestorage node. -

The director runs

/usr/local/sbin/dsa-bacula-schedulerwhich reads/etc/bacula/dsa-clientsfor a list of clients to back up. This file is populated by puppet (puppetdb$bacula::tag_bacula_dsa_client_list) and will list clients until they're being deactivated in puppet.

Clients

tor-puppet/modules/bacula/manifests/client.ppgives an idea of where things are at on backup clients.- Clients run the Bacula File Daemon,

bacula-fd(8).

Onion sites

-

Example from a vhost template

<% if scope.function_onion_global_service_hostname(['crm-2018.torproject.org']) -%> <Virtualhost *:80> ServerName <%= scope.function_onion_global_service_hostname(['crm-2018.torproject.org']) %> Use vhost-inner-crm-2018.torproject.org </VirtualHost> <% end -%> -

Function defined in

tor-puppet/modules/puppetmaster/lib/puppet/parser/functions/onion_global_service_hostname.rbparses/srv/puppet.torproject.org/puppet-facts/onionbalance-services.yaml. -

onionbalance-services.yamlis populated throughonion::balance(tor-puppet/modules/onion/manifests/balance.pp) -

onion:balanceuses theonion_balance_service_hostnamefact fromtor-puppet/modules/torproject_org/lib/facter/onion-services.rb

Puppet

See service/puppet.

Extra is one of the sites hosted by "the www rotation". The www rotation uses several computers to host its websites and it is used within tpo for redundancy.

Extra is used to host images that can be linked in blog posts and the like. The idea is that you do not need to link images from your own computer or people.tpo.

Extra is used like other static sites within tpo. Learn how to write to extra

So you want to give us hardware? Great! Here's what we need...

Physical hardware requirements

If you want to donate hardware, there are specific requirements for machine we manage that you should follow. For other donations, please see the donation site.

This list is not final, and if you have questions, please contact us. Also note that we also accept virtual machine "donations" now, for which requirements are different, see below.

Must have

- Out of band management with dedicated network port, preferably a something standard (like serial-over-ssh, with BIOS redirection), or failing that, serial console and networked power bars

- No human intervention to power on or reboot

- Warranty or post-warranty hardware support, preferably provided by the sponsor

- Under the 'ownership' of Tor, although long-term loans can also work

- Rescue system (PXE bootable OS or remotely loadable ISO image)

Nice to have

- Production quality rather than pre-production hardware

- Support for multiple drives (so we can do RAID) although this can be waived for disposable servers like build boxes

- Hosting for the machine: we do not run our own data centers or rack, so it would be preferable if you can also find a hosting location for the machine, see the hosting requirements below for details

To avoid

- proprietary Java/ActiveX remote consoles

- hardware RAID, unless supported with open drivers in the mainline Linux kernel and userland utilities

Hosting requirements

Those are requirements that apply to actual physical / virtual hosting of machines.

Must have

- 100-400W per unit density, depending on workload

- 1-10gbit, unmetered

- dual stack (IPv4 and IPv6)

- IPv4 address space (at least one per unit, typically 4-8 per unit)

- out of band access (IPMI or serial)

- rescue systems (e.g. PXE booting)

- remote hands SLA ("how long to replace a broken hard drive?")

Nice to have

- "clean" IP addresses (for mail delivery, etc)

- complete /24 IPv4, donated to the Tor project

- private VLANs with local network

- BGP announcement capabilities

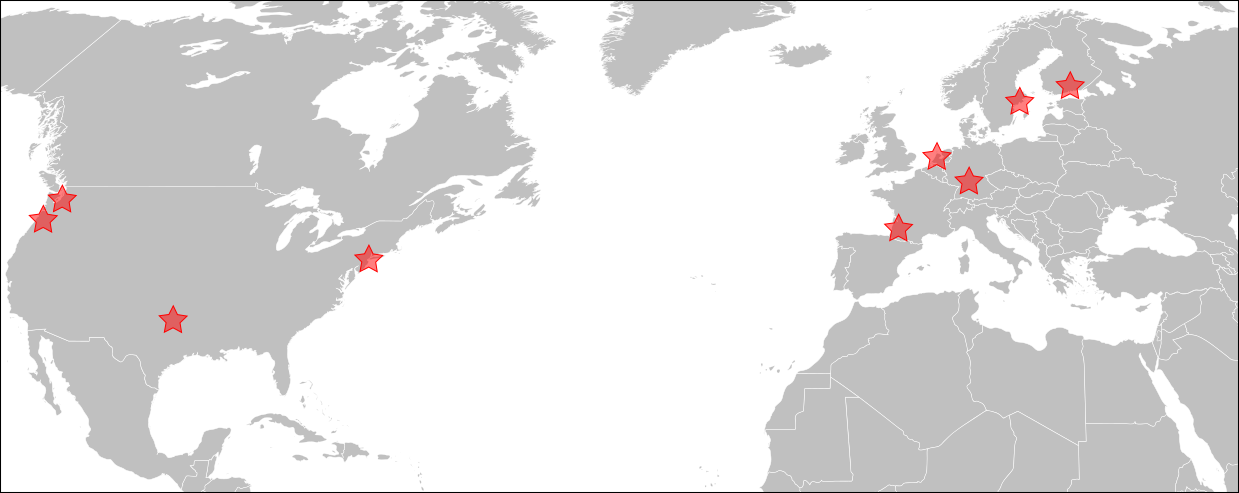

- not in europe or northern america

- free, or ~ 150$/unit

Virtual machines requirements

Must have

Without those, we will have to be basically convinced to accept those machines:

- Debian OS

- Shell access (over SSH)

- Unattended reboots or upgrades

The latter might require more explanations. It means the machine can be rebooted without intervention of an operator. It seems trivial, but some setups make that difficult. This is essential so that we can apply Linux kernel upgrades. Alternatively, manual reboots are acceptable if such security upgrades are automatically applied.

Nice to have

Those we would have in an ideal world, but are not deal breakers:

- Full disk encryption

- Rescue OS boot to install our own OS

- Remote console

- Provisioning API (cloud-init, OpenStack, etc)

- Reverse DNS

- Real IP address (no NAT)

To avoid

Those are basically deal breakers, but we have been known to accept those situations as well, in extreme cases:

- No control over the running kernel

- Proprietary drivers

Overview

The aim of this document is to explain the steps required to set up a local Lektor development environment suitable for working on Tor Project websites based on the Lektor platform.

We'll be using the Sourcetree git GUI to provide a user-friendly method of working with the various website's git repositories.

Prerequisites

First we'll install a few prerequisite packages, including Sourcetree.

You must have administrator privileges to install these software packages.

First we'll install the Xcode package.

Open the Terminal app and enter:

xcode-select --install

Click Install on the dialog that appears.

Now, we'll install the brew package manager, again via the Terminal:

/bin/bash -c "$(curl -fsSL

https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

Now we're ready to install a few more tools:

brew install coreutils git git-lfs python3.8

And lastly we need to download and install Sourcetree. This can be done from the app's website: https://www.sourcetreeapp.com/

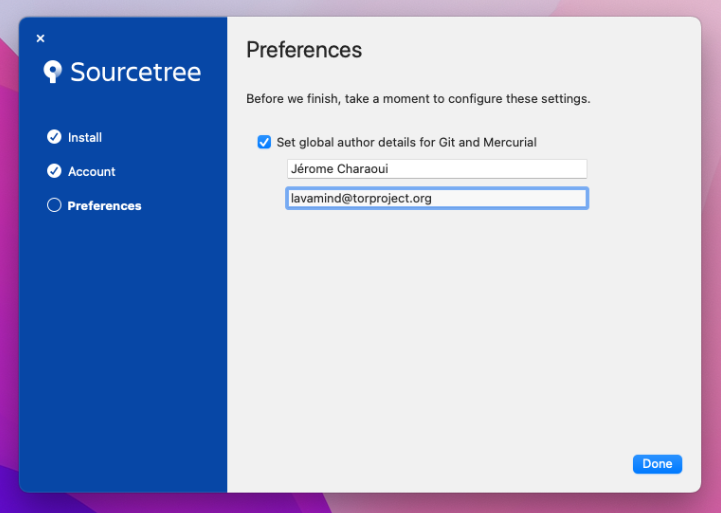

Follow the installer prompts, entering name and email address so that the git commits are created with adequate identifying information.

Connect GitLab account

This step is only required if you want to create Merge Requests in GitLab.

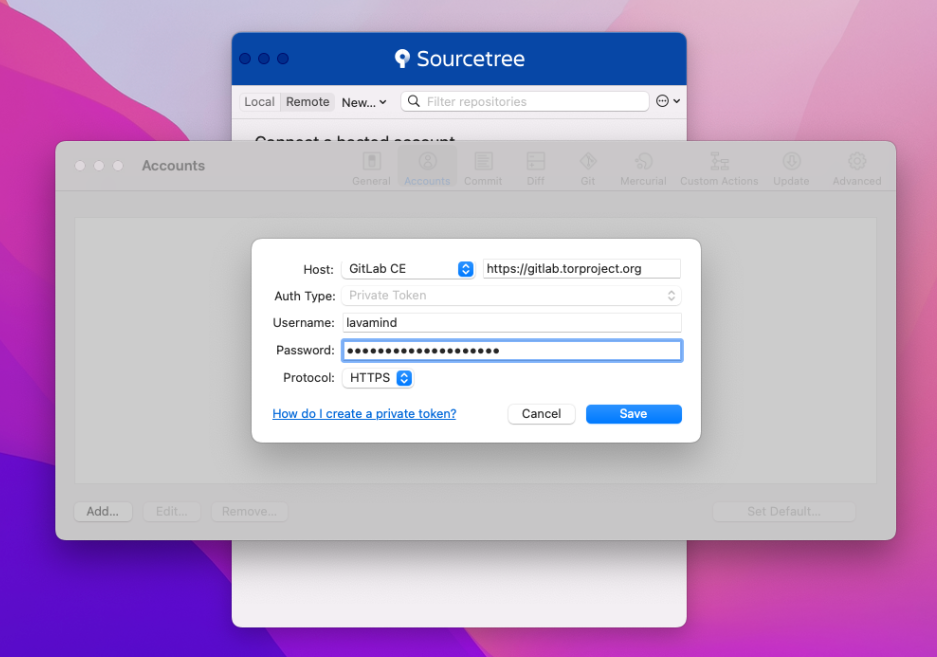

Next, we'll create a GitLab token to allow Sourcetree to retrieve and update projects.

- Navigate to https://gitlab.torproject.org/-/profile/personal_access_tokens

- Enter

sourcetreeunder Token name - Choose an expiration date, ideally not more than a few months

- Check the box next to

api - Click Create personal access token

- Copy the token into your clipboard

Now, open Sourcetree and click the Connect... button on the main windows, then Add..., and fill in the dialog as below. Paste the token in the Password field.

Click the Save button.

The Remote tab on the main window should now show a list of git repositories available on the Tor Project GitLab.

To clone a project, enter its name (eg. tpo or blog) in the Filter

repositories input box and click the Clone link next to it.

Depending on the project, a dialog titled Git LFS: install required may then appear. If so, click Yes to ensure all the files in the project are downloaded from GitLab.

Page moved to TPA-RFC-6: Naming Convention.

Email delivery problems are unfortunately quite common but there are often simple solutions to the problems once we know exactly what is going on.

When reporting delivery problems on Email infrastructure, make sure you include at least the following information in your report:

- originating email address (e.g.

Alice <alice@torproject.org>) - destination email address (e.g.

Bob <bob@torproject.org>) - date and time the email was sent, with timezone, to the second

(e.g.

2019-06-03 13:52:30 +0400) - how the email was sent (e.g. from my laptop, over SMTP+TLS to my

email provider,

riseup.net) - what error did you get (e.g. a bounce, message not delivered)

Ideally, if you can, provide us with the Message-ID header, if you

know what that is and can find it. Otherwise, don't worry about it and

provide us with the above details.

If you do get a bounced message, do include the entire bounce, with headers. The simplest way to do so is forward it as an attachment or "view source" and copy-paste it somewhere safe (like https://share.riseup.net/).

Ideally, also include a copy of the original message in your report, also with full headers.

If you can't send a copy of the original message for privacy reasons, at least include the headers of the email.

Send us the message using the regular methods, as appropriate, see the support guide for details.

Service on TPO machines are often run as regular users, from normal

sessions, instead of the usual /etc/init.d or systemd

configuration provided by Debian packages. This is part of our

service vs system admin distinction.

This page aims at documenting how such services are started and

managed. There are many ways this can be done: many services have been

started as a @reboot cronjob in the past, but we're looking at using

systemd --user as a more reasonable way to do this in the future.

systemd startup

Most Debian machines now run systemd which allows all sorts of

neat tricks. In particular, it allows us to start programs as a normal

user through a systemd --user session that gets started

automatically at boot.

Adding a new service

User-level services are deployed in ~/.config/systemd/user/. Let's

say we're deploying a service called $SERVICE. You'd need to craft a

.service file and drop it in

~/.config/systemd/user/$SERVICE.service:

[Unit]

Description=Run a program forever that does not fork

[Service]

Type=simple

ExecStart=/home/role/bin/service start

[Install]

WantedBy=default.target

Then you can run:

systemctl --user daemon-reload

For the new file to be notified.

If you're getting an error like this:

Failed to connect to bus: No such file or directory

It's because your environment is not setup correctly and systemctl

can't find the correct sockets. Try to set the XDG_RUNTIME_DIR

environment to the right user directory:

export XDG_RUNTIME_DIR=/run/user/$(id -u)

Then the service can be enabled:

systemctl --user enable $SERVICE

And then started:

systemctl --user start $SERVICE

sysadmin stuff

On the sysadmin side, to enable systemd --user session, we need to

run loginctl enable-linger $USER. For example, this will enable the

session for the user $USER:

loginctl_user { $USER: linger => enabled }

This will create an empty file for the user in

/var/lib/systemd/linger/ but it will also start the systemd --user session immediately, which can already be used to start other

processes.

cron startup

This method is now discouraged, but is still in use for older services.

Failing systemd or admin support, you might be able to start

services at boot time with a cron job.

The trick is to edit the role account crontab with sudo -u role crontab -e and then adding a line like:

@reboot /home/role/bin/service start

It is deprecated because cron is not a service manager and has no

way to restart the service easily on upgrades. It also lacks features

like socket activation or restart on failure that systemd

provides. Plus it won't actually start the service until the machine

is rebooted, that's just plain silly.

The correct way to start the above service is to use the .service

file documented in the previous section.

You need to use an ssh jump host to access internal machines at tpo.

If you have a recent enough ssh (>= 2016 or so), then you can use the ProxyJump directive. Else, use ProxyCommand.

ProxyCommand automatically executes the ssh command on the host to jump to the next host and forward all traffic through.

With recent ssh versions:

Host *.torproject.org !ssh.torproject.org !people.torproject.org !gitlab.torproject.org

ProxyJump ssh.torproject.org

Or with old ssh versions (before OpenSSH 7.3, or Debian 10 "buster"):

Host *.torproject.org !ssh.torproject.org !people.torproject.org !gitlab.torproject.org

ProxyCommand ssh -l %r -W %h:%p ssh.torproject.org

Note that there are multiple ssh-like aliases that you can use,

depending on your location (or the location of the target host). Right

now there are two:

ssh-dal.torproject.org- in Dallas, TX, USAssh-fsn.torproject.org- in Falkenstein, Saxony, Germany

The canonical list for this is searching for ssh in the purpose

field on the machines database.

Note: It is perfectly acceptable to run

pingagainst the server to determine the closest to your location, and you can also run ping from the server to a target server as well. The shortest path will be the one that has the lowest sum for those two, naturally.

This naming convention was announced in TPA-RFC-59.

Host authentication

It is also worth keeping the known_hosts file in sync to avoid

server authentication warnings. The server's public keys are also

available in DNS. So add this to your .ssh/config:

Host *.torproject.org

UserKnownHostsFile ~/.ssh/known_hosts.torproject.org

VerifyHostKeyDNS ask

And keep the ~/.ssh/known_hosts.torproject.org file up to date by

regularly pulling it from a TPO host, so that new hosts are

automatically added, for example:

rsync -ctvLP ssh.torproject.org:/etc/ssh/ssh_known_hosts ~/.ssh/known_hosts.torproject.org

Note: if you would prefer the above file to not contain the shorthand hostname

notation (i.e. alberti for alberti.torproject.org), you can get rid of those

with the following command after the file is on your computer:

sed -i 's/,[^,.: ]\+\([, ]\)/\1/g' .ssh/known_hosts.torproject.org

Different usernames

If your local username is different from your TPO username, also set

it in your .ssh/config:

Host *.torproject.org

User USERNAME

Root access

Members of TPA might have a different configuration to login as root by default, but keep their normal user for key services:

# interact as a normal user with Puppet, LDAP, jump and gitlab servers by default

Host puppet.torproject.org db.torproject.org ssh.people.torproject.org people.torproject.org gitlab.torproject.org

User USERNAME

Host *.torproject.org

User root

Note that git hosts are not strictly necessary as you should normally

specify a git@ user in your git remotes, but it's a good practice

nevertheless to catch those scenarios where that might have been

forgotten.

When not to use the jump host

If you're going to do a lot of batch operations on all hosts (for example with Cumin), you definitely want to add yourself to the adding yourself to the allow list so that you can skip using the jump host.

For this, anarcat uses a special trusted-network command that fails

unless the network is on that allow list. Therefore, the above jump

host exception list becomes:

# use jump host if the network is not in the trusted whitelist

Match host *.torproject.org, !host ssh.torproject.org, !host ssh-dal.torproject.org, !host ssh-fsn.torproject.org, !host people.torproject.org, !host gitlab.torproject.org, !exec trusted-network

ProxyJump anarcat@ssh-dal.torproject.org

The trusted-network command checks for the default gateway on

the local machine and checks if it matches an allow list. It could

also just poke at the internet to see "what is my IP address", like:

- https://check.torproject.org/

- https://wtfismyip.com/text

- https://ifconfig.me/ip

- https://ip.me/

- https://test.anarc.at/

Sample configuration

Here is a redacted copy of anarcat's ~/.ssh/config file:

Host *

# disable known_hosts hashing. it provides little security and

# raises the maintenance cost significantly because the file

# becomes inscrutable

HashKnownHosts no

# this defaults to yes in Debian

GSSAPIAuthentication no

# set a path for the multiplexing stuff, but do not enable it by

# default. this is so we can more easily control the socket later,

# for processes that *do* use it, for example git-annex uses this.

ControlPath ~/.ssh/control-%h-%p-%r

ControlMaster no

# ~C was disabled in newer OpenSSH to facilitate sandboxing, bypass

EnableEscapeCommandline yes

# taken from https://trac.torproject.org/projects/tor/wiki/doc/TorifyHOWTO/ssh

Host *-tor *.onion

# this is with netcat-openbsd

ProxyCommand nc -x 127.0.0.1:9050 -X 5 %h %p

# if anonymity is important (as opposed to just restrictions bypass), you also want this:

# VerifyHostKeyDNS no

# interact as a normal user with certain symbolic names for services (e.g. gitlab for push, people, irc bouncer, etc)

Host db.torproject.org git.torproject.org git-rw.torproject.org gitlab.torproject.org ircbouncer.torproject.org people.torproject.org puppet.torproject.org ssh.torproject.org ssh-dal.torproject.org ssh-fsn.torproject.org

User anarcat

# forward puppetdb for cumin by default

Host puppetdb-01.torproject.org

LocalForward 8080 127.0.0.1:8080

Host minio*.torproject.org

LocalForward 9090 127.0.0.1:9090

Host prometheus2.torproject.org

# Prometheus

LocalForward 9090 localhost:9090

# Prometheus Pushgateway

LocalForward 9091 localhost:9091

# Prometheus Alertmanager

LocalForward 9093 localhost:9093

# Node exporter is 9100, but likely running locally

# Prometheus blackbox exporter

LocalForward 9115 localhost:9115

Host dal-rescue-02.torproject.org

Port 4622

Host *.torproject.org

UserKnownHostsFile ~/.ssh/known_hosts.d/torproject.org

VerifyHostKeyDNS ask

User root

# use jump host if the network is not in the trusted whitelist

Match host *.torproject.org, !host ssh.torproject.org, !host ssh-dal.torproject.org, !host ssh-fsn.torproject.org, !host people.torproject.org, !host gitlab.torproject.org, !exec trusted-network

ProxyJump anarcat@ssh-dal.torproject.org

How to change the main website

The Tor website is managed via its git repository.

It is usually advised to get changes validated via a merge request on the project.

Once changes are merged to the main branch, , if the changes pass validation checks they get deployed automatically to staging.

If after the auto-deploy to staging everything looks as expected, changes can

be deployed to prod by manually launching the CI job deploy prod.

How to change other static websites

A handful of other static websites -- like extra.tp.o, dist.tp.o, and more -- are hosted at several computers for redundancy, and these computers are together called "the www rotation".

How do you edit one of these websites? Let's say you want to edit extra.

-

First you ssh in to

staticiforme(using an ssh jump host if needed) -

Then you make your edits as desired to

/srv/extra-master.torproject.org/htdocs/ -

When you're ready, you run this command to sync your changes to the www rotation:

sudo -u mirroradm static-update-component extra.torproject.org

Example: You want to copy image.png from your Desktop to your blog

post indexed as 2017-01-01-new-blog-post:

scp /home/user/Desktop/image.png staticiforme.torproject.org:/srv/extra-master.torproject.org/htdocs/blog/2017-01-01-new-blog-post/

ssh staticiforme.torproject.org sudo -u mirroradm static-update-component extra.torproject.org

Which sites are static?

The complete list of websites served by the www rotation is not easy to figure out, because we move some of the static sites around from time to time. But you can learn which websites are considered "static", i.e. you can use the above steps to edit them, via:

ssh staticiforme cat /etc/static-components.conf

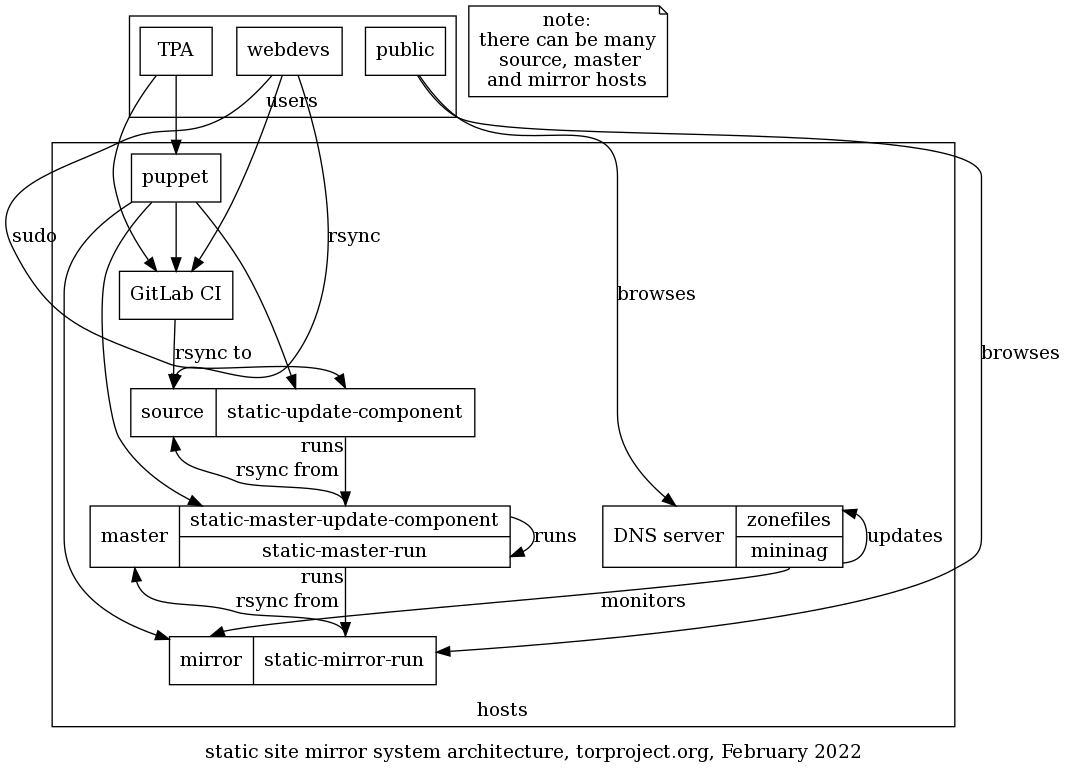

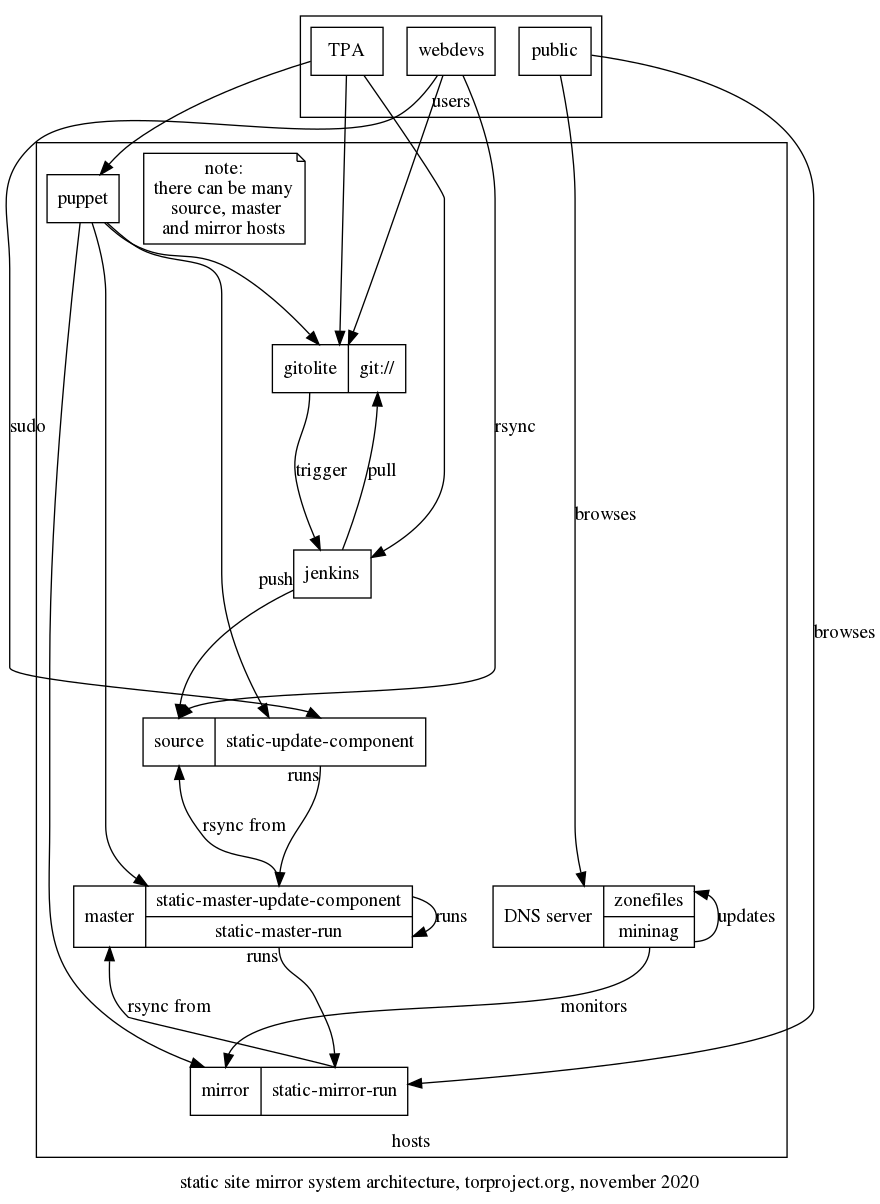

How does this work?

If you're a sysadmin and wondering how that stuff work or do anything back there, look at service/static-component.

SVN accounts

We still use SVN in some places. All public SVN repositories are available at svn.torproject.org. We host our presentations, check.torproject.org, website, and an number of older codebases in it. The most frequently updated directories are the website and presentations. SVN is not tied to LDAP in any way.

SVN Repositories available

The following SVN repositories are available:

- android

- arm

- blossom

- check

- projects

- todo

- torctl

- torflow

- torperf

- translation

- weather

- website

Steps to SVN bliss

-

Open a trac ticket per user account desired.

-

The user needs to pick a username and which repository to access (see list above)

-

SVN access requires output from the following command:

htdigest -c password.tmp "Tor subversion repository" <username> -

The output should be mailed to the subversion service maintainer (See Infrastructure Page on trac) with Trac ticket reference contained in the email.

-

The user will be added and emailed when access is granted.

-

The trac ticket is updated and closed.

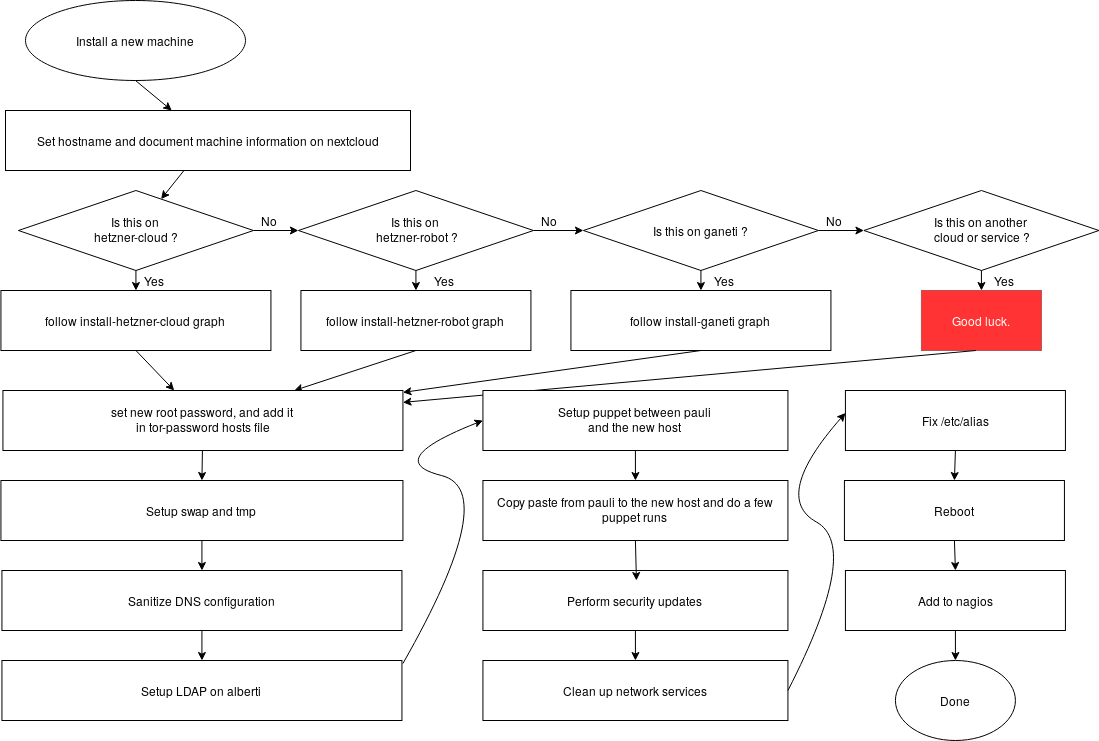

This documentation is primarily aimed at sysadmins and establishes various procedures not necessarily associated with a specific service.

Pages are grouped by some themes to make them easier to find in this page.

Accessing servers:

User access management:

Machine management:

- DRBD

- incident-response

- lvm

- nftables

- raid

- rename-a-host

- retire-a-host

- new-machine-cymru

- new-machine-hetzner-cloud

- new-machine-hetzner-robot

- new-machine

- new-machine-mandos

- new-machine-ovh-cloud

- reboots

- upgrades

Other misc. documentation:

The APUs are neat little devices from PC Engines. We use them as jump hosts and, generally, low-power servers where we need them.

This documentation was written with a APU3D4, some details may vary with other models.

Tutorial

How to

Console access

The APU comes with a DB-9 serial port. You can connect to that port

using, typically, a null modem cable and a serial-to-USB

adapter. Once properly connected, the device will show up as

/dev/ttyUSB0 on Linux. You can connect to it with GNU screen

with:

screen /dev/ttyUSB0 115200

... or with plain cu(1):

cu -l /dev/ttyUSB0 -s 115200

If you fail to connect, PC Engines actually has minimalist but good documentation on the serial port.

BIOS

When booting, you should be able to see the APU's BIOS on the serial console. It looks something like this after a few seconds:

PCEngines apu3

coreboot build 20170302

4080 MB ECC DRAM

SeaBIOS (version rel-1.10.0.1)

Press F10 key now for boot menu

The boot menu then looks something like that:

Select boot device:

1. USB MSC Drive Kingston DataTraveler 3.0

2. SD card SD04G 3796MiB

3. ata0-0: SATA SSD ATA-9 Hard-Disk (111 GiBytes)

4. Payload [memtest]

5. Payload [setup]

Hitting 4 puts you in a Memtest86 memory test (below). The setup screen looks like this:

### PC Engines apu2 setup v4.0.4 ###

Boot order - type letter to move device to top.

a USB 1 / USB 2 SS and HS

b SDCARD

c mSATA

d SATA

e iPXE (disabled)

r Restore boot order defaults

n Network/PXE boot - Currently Disabled

t Serial console - Currently Enabled

l Serial console redirection - Currently Enabled

u USB boot - Currently Enabled

o UART C - Currently Disabled

p UART D - Currently Disabled

x Exit setup without save

s Save configuration and exit

i.e. it basically allows you to change the boot order, enable network booting, disable USB booting, disable the serial console (probably ill-advised), and mess with the other UART ports.

The network boot actually drops you in iPXE which is nice (version 1.0.0+ (f8e167) from 2016) as it allows you to bootstrap one rescue host with another (see the installation section below).

Memory test

The boot menu (F10 then 4) provides a built-in memory test which runs Memtest86 5.01+ and looks something like this:

Memtest86+ 5.01 coreboot 001| AMD GX-412TC SOC

CLK: 998.3MHz (X64 Mode) | Pass 6% ##

L1 Cache: 32K 15126 MB/s | Test 67% ##########################

L2 Cache: 2048K 5016 MB/s | Test #5 [Moving inversions, 8 bit pattern]

L3 Cache: None | Testing: 2048M - 3584M 1536M of 4079M

Memory : 4079M 1524 MB/s | Pattern: dfdfdfdf | Time: 0:03:49

------------------------------------------------------------------------------

Core#: 0 (SMP: Disabled) | CPU Temp | RAM: 666 MHz (DDR3-1333) - BCLK: 100

State: - Running... | 48 C | Timings: CAS 9-9-10-24 @ 64-bit Mode

Cores: 1 Active / 1 Total (Run: All) | Pass: 0 Errors: 0

------------------------------------------------------------------------------

PC Engines APU3

(ESC)exit (c)configuration (SP)scroll_lock (CR)scroll_unlock (l)refresh

Pager playbook

Disaster recovery

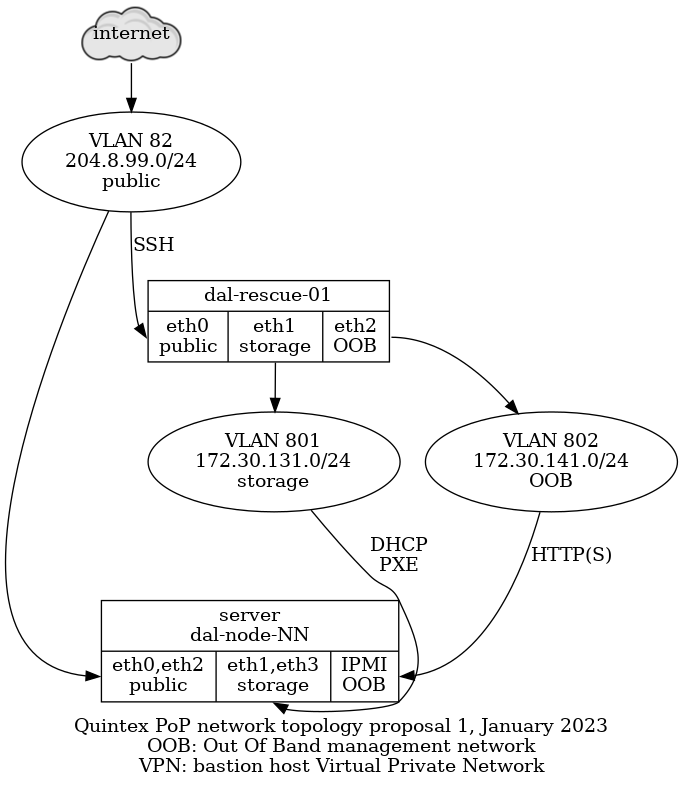

If the server in the datacenter (currently dal-rescue-01, soon to be

replaced by dal-rescue-02) dies, the plan is to ship the

spare. anarcat holds a running copy of the production server at home

for experiments and shipping.

Unfortunately, since Trump implemented tariffs with Canada, shipping has become more complex and we are considering a change in that approach, as shipping has become prohibitively expensive. Out of a depreciated 150$ value, we had to pay about 70$CAD for shipping: 40$CAD for postage with Canada Post, and 30$ for duties. One also has to install an app (Zonos Prepay) to pay for duties.

Next step here is likely to pick an alternative platform (see Other alternatives below) and ship that directly to the datacenter, performing the install with a KVM, serial from another existing jump host, or by remote hands.

See tpo/tpa/team#42438 for examples on what that looked like the last time we did this.

Reference

Installation

The current APUs were ordered directly from the PC Engines shop, specifically the USD section. The build was:

2 apu3d4 144.00 USD 288.00 HTS 8471.5000 TW Weight 470g

APU.3D4 system board 4GB

2 case1d2redu 10.70 USD 21.40 HTS 8473.3000 CN Weight 502g

Enclosure 3 LAN, red, USB

2 ac12vus2 4.40 USD 8.80 HTS 8504.4000 KH Weight 266g

AC adapter 12V US plug for IT equipment

2 msata120c 15.50 USD 31.00 HTS 8523.5100 CN Weight 14g

SSD M-Sata 120GB TLC

2 sd4b 6.90 USD 13.80 HTS 8523.5100 TW Weight 4g

SD card 4GB pSLC Phison

2 assy2 7.50 USD 15.00 HTS 8471.5000 CH Weight 120g

assembly + box

Shipping TBD !!! USD 0.00 Weight 1376g

VAT USD 0.00

Total USD 378.00

Note how the price is for two complete models. The devices shipped promptly; it was basically shipped in 3 days, but customs added an additional day of delay over the weekend, which led to a 6 days (4 business days) shipping time.

One of the machine was connected over serial (see above) and booted with a GRML "96" (64 and 32 bit) image over USB. Booting GRML from USB is tricky, however, because you need to switch from 115200 to 9600 once grub finishes loading, as GRML still defaults to 9600 baud instead of 115200. It may be possible to tweak the GRUB commandline to change the speed, but since it's in the middle of the kernel commandline and that the serial console editing capabilities are limited, it's actually pretty hard to get there.

The other box was chain-loaded with iPXE from the first box, as a

stress-test. This was done by enabling the network boot in the BIOS

(F10 to enter the BIOS in the serial console, then

5 to enter setup and n to enable network boot

and s to save). Then hit n to enable network

boot and choose "iPXE shell" when prompted. Assuming both hosts are

connected over their eth1 storage interfaces, you should then do:

iPXE> dhcp net1

iPXE> chain autoexec.ipxe

This will drop you in another DHCP sequence, which will try to

configure each interface. You can control-c to skip net0

and then the net1 interface will self-configure and chain-load the

kernel and GRML. Because the autoexec.ipxe stores the kernel

parameters, it will load the proper serial console settings and

doesn't suffer from the 9600 bug mentioned earlier.

From there, SSH was setup and key was added. We had DHCP in the lab so we just reused that IP configuration.

service ssh restart

cat > ~/.ssh/authorized_keys

...

Then the automated installer was fired:

./install -H root@192.168.0.145 \

--fingerprint 3a:4d:dd:91:79:af:4e:c4:17:e5:c8:d2:d6:b5:92:51 \

hetzner-robot \

--fqdn=dal-rescue-01.torproject.org \

--fai-disk-config=installer/disk-config/dal-rescue \

--package-list=installer/packages \

--post-scripts-dir=installer/post-scripts/ \

--ipv4-address 204.8.99.100 \

--ipv4-subnet 24 \

--ipv4-gateway 204.8.99.1

WARNING: the dal-rescue disk configuration is incorrect. The 120GB

disk gets partitioned incorrectly, as its RAID-1 partition is bigger

than the smaller SD card.

Note that IP configuration was actually performed manually on the node, the above is just an example of the IP address used by the box.

Next, the new-machine procedure was followed.

Finally, the following steps need to be performed to populate /srv:

-

GRML image, note that we won't be using the

grml.ipxefile, so:apt install debian-keyring && wget https://download.grml.org/grml64-small_2022.11.iso && wget https://download.grml.org/grml64-small_2022.11.iso.asc && gpg --verify --keyring /usr/share/keyrings/debian-keyring.gpg grml64-small_2022.11.iso.asc && echo extracting vmlinuz and initrd from ISO... && mount grml64-small_2022.11.iso /mnt -o loop && cp /mnt/boot/grml64small/* . && umount /mnt && ln grml64-small_2022.11.iso grml.iso -

build the iPXE image but without the floppy stuff, basically:

apt install build-essential &&

git clone git://git.ipxe.org/ipxe.git &&

cd ipxe/src &&

mkdir config/local/tpa/ &&

cat > config/local/tpa/general.h <<EOF

#define DOWNLOAD_PROTO_HTTPS /* Secure Hypertext Transfer Protocol */

#undef NET_PROTO_STP /* Spanning Tree protocol */

#undef NET_PROTO_LACP /* Link Aggregation control protocol */

#undef NET_PROTO_EAPOL /* EAP over LAN protocol */

#undef CRYPTO_80211_WEP /* WEP encryption (deprecated and insecure!) */

#undef CRYPTO_80211_WPA /* WPA Personal, authenticating with passphrase */

#undef CRYPTO_80211_WPA2 /* Add support for stronger WPA cryptography */

#define NSLOOKUP_CMD /* DNS resolving command */

#define TIME_CMD /* Time commands */

#define REBOOT_CMD /* Reboot command */

#define POWEROFF_CMD /* Power off command */

#define PING_CMD /* Ping command */

#define IPSTAT_CMD /* IP statistics commands */

#define NTP_CMD /* NTP commands */

#define CERT_CMD /* Certificate management commands */

EOF

make -j4 CONFIG=tpa bin-x86_64-efi/ipxe.efi bin-x86_64-pcbios/undionly.kpxe

-

copy the iPXE files in

/srv/tftp:cp bin-x86_64-efi/ipxe.efi bin-x86_64-pcbios/undionly.kpxe /srv/tftp/ -

create a

/srv/tftp/autoexec.ipxe:

#!ipxe

dhcp

kernel http://172.30.131.1/vmlinuz

initrd http://172.30.131.1/initrd.img

initrd http://172.30.131.1/grml.iso /grml.iso

imgargs vmlinuz initrd=initrd.magic boot=live config fromiso=/grml.iso live-media-path=/live/grml64-small noprompt noquick noswap console=tty0 console=ttyS1,115200n8

boot

Upgrades

SLA

Design and architecture

Services

Storage

The APUs we have setup are using two disks:

- a SD card (previously generic 4GB, now 128GB Samsung PRO Plus MicroSD XC I, U3 A2 V30 micro-SD)

- a mSATA 120GB SSD drive which failed in dal-rescue-01 and was replaced with a Transcend 128GB drive, anarcat has a spare

Queues

Interfaces

Serial console

The APU should provide a serial console access over the DB-9 serial port, standard 115200 baud. The install is configured to offer the bootloader and a login prompt over the serial console, and a basic BIOS is also available.

LEDs

The APU has no graphical interface (only serial, see above), but there are LEDs in the front that have been configured from Puppet to make systemd light them up in a certain way.

From left to right, when looking at the front panel of the APU (not the one with the power outlets and RJ-45 jacks):

- The first LED lights up when the machine boots, and should be on

when the LUKS prompt waits. then it briefly turns off when the

kernel module loads and almost immediately turns back on when

filesystems are mounted (

DefaultDependencies=noandAfter=local-fs.target) - The second LED lights up when systemd has booted and has quieted

(

After=multi-user.targetandType=idle) - The third LED should blink according to the "activity" trigger which is defined in ledtrig_activity kernel module

Network

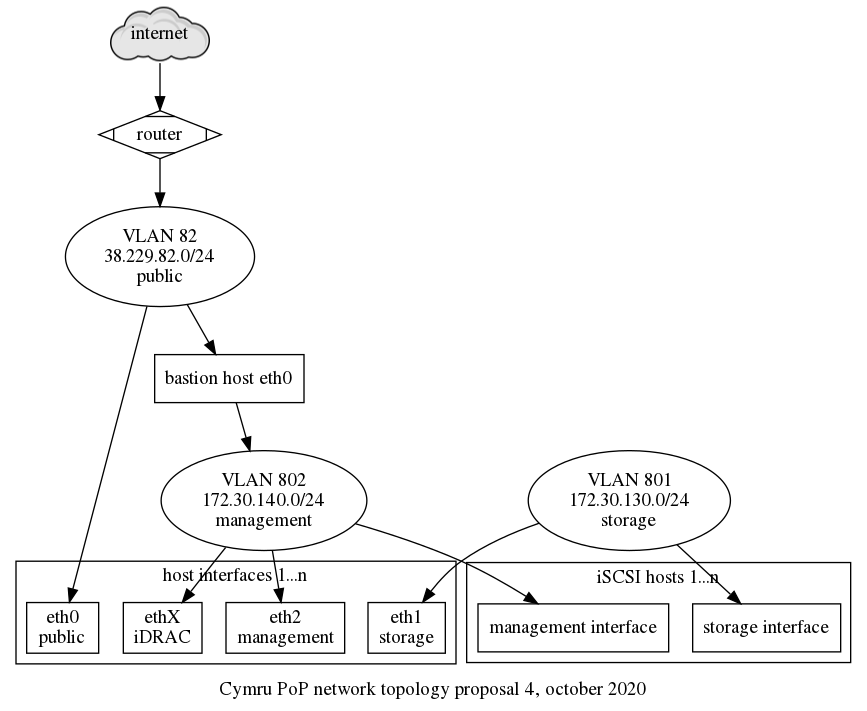

The three network ports should be labeled according to which VLAN they are supposed to be configured for, see the Quintex network layout for details on that configuration.

From left to right, when looking at the back panel of the APU (the one with the network ports, after the DB-9 serial port):

-

eth0 public: public network interface, to be hooked up to thepublicVLAN, mapped toeth0in Linux -

eth1 storage: private network interface, to be hooked up to thestorageVLAN and where DHCP and TFTP is offered, mapped toeth1in Linux -

eth2 OOB: private network interface, to be hooked up to theOOB("Out Of Band" management) VLAN, to allow operators to access the OOB interfaces of the other servers

Authentication

Implementation

Related services

Issues

There is no issue tracker specifically for this project, File or search for issues in the team issue tracker with the label ~Foo.

Maintainer

Users

Upstream

Monitoring and metrics

Tests

Logs

Backups

Other documentation

Discussion

Overview

Security and risk assessment

Technical debt and next steps

Proposed Solution

Other alternatives

APU hardware

We also considered a full 1U case but that seemed really costly. We have also considered a HDD enclosure but that didn't seem necessary either.

APU EOL and alternatives

As of 2023-04-18, the PC Engines website has a stronger EOL page that explicitly states that "The end is near!" and that:

Despite having used considerable quantities of AMD processors and Intel NICs, we don't get adequate design support for new projects. In addition, the x86 silicon currently offered is not very appealing for our niche of passively cooled boards. After about 20 years of WRAP, ALIX and APU, it is time for me to move on to different things.

It therefore seems unlikely that new PC Engines product will be made in the future, and that platform should be considered dead.

In our initial research (tpo/tpa/team#41058) we found two other options (the SolidRun and Turris, below), but since then we've expanded the search and we're keeping a list of alternatives here.

The specification is, must have:

- small (should fit in a 1U)

- low power (10-50W max)

- serial port or keyboard and monitor support

- at least three network ports

- 3-4GB storage for system (dal-rescue-02 uses 2.1GB as of this writing)

- 1-5GB storage for system images (dal-rescue-02 uses 1GB)

Nice to have:

- faster than the APU3 (AMD GX-412TC SOC 600MHz)

- rack-mountable

- coreboot

- "open hardware"

- 12-24 network ports (yes, that means it's a switch, and that we don't need an extra OOB switch)

Other possibilities:

- SolidRun Honeycomb: can fit two ARM servers in a 1U case, SFP ports, 64GB RAM, 16-core NXP 2GHz, a bit overkill

- Turris Shield: SOHO firewall appliance, not sure about Debian compatibility

- Qotom: rugged devices, has a 1U form factor, no coreboot, no price listing

- Protectli: rugged, coreboot, fanless, 2-6 2.5gbps NICs, Intel quad core, 8-64GB RAM, DP port option

- OPNsense: "network appliances", rackmountable, costly (700$+)

- Fitlet: really rugged, miniature, fanless, lock-in power input, 4x 2.5gbps, 2 mini-HDMI, serial port, quad core Intel Atom, mSATA SSD, coreboot, 400$ without RAM (DDR3L-1600)

- Ten64: crowdfunding project, shipping, 8 gigabit ports, 2 SFP, 8 core ARM, 10W, up to 32GB DDR SO-DIMM, 256MB onboard flash, 700$ before RAM 2xNVMe, mini PCIe for wifi, LTE, SATA, SIM tray, 700$USD before RAM

- NUCs like the Gigabyte's Brix, Beelink could be an option as well, no coreboot, slightly thicker (more than 1U?)

- there's of course a whole cornucopia of SBC (Single Board Computers) out there, e.g. Minnowboard, EspressoBIN, Banana PI, MACCHIATObin, O-DROID, Olimex, Pine64, protectli, UP, and many more (see hackboards.com for a database and this HN discussion for other comments)

- see also this list from anarcat

Update: anarcat and ahf have both good experience running Protectli machines in their home labs, look like good candidates. See also tpo/tpa/team#41666. After talking with the Protectli folks, it seems the options are:

- FW4B: 4-port gbit, Intel Celeron® J3160 Quad Core 1.6 GHz / 2.24 GHz, AES-SNI, 4-8GB RAM, 120GB mSATA SSD, 345$CAD, no room for an extra drive, although there's a SATA connector so a SATA DOM module could be used

- V1410: 4-port 2.5Gbit, Intel N5105 Quad Core 2.0 GHz / 2.9 GHz, 8GB onboard RAM, 32gb eMMC, M.2 NVMe slot, 418$CAD with a 256GB NVMe drive

Neither support our traditional "everything on RAID-1" model. We might

want to reconsider for such "appliances" and instead minimize the

amount of writes on the system (since this is, after all, a mostly

static system) by having all writes to /tmp and the system mostly

read-only except for upgrades. Then the eMMC becomes a rescue system

that's not on RAID-1 but periodically synced somehow.

Or we just treat the eMMC as failible and keep a spare machine, which reduces the unit cost to 370$.

This procedure documents various benchmarking procedures in use inside TPA.

HTTP load testing

Those procedures were quickly established to compare various caching software as part of the cache service setup.

Common procedure

-

punch a hole in the firewall to allow the test server to access tested server, in case it is not public yet

iptables -I INPUT -s 78.47.61.104 -j ACCEPT ip6tables -I INPUT -s 2a01:4f8:c010:25ff::1 -j ACCEPT -

point the test site (e.g.

blog.torproject.org) to the tested server on the test server, in/etc/hosts:116.202.120.172 blog.torproject.org 2a01:4f8:fff0:4f:266:37ff:fe26:d6e1 blog.torproject.org -

disable Puppet on the test server:

puppet agent --disable 'benchmarking requires /etc/hosts override' -

launch the benchmark on the test server

Siege

Siege configuration sample:

verbose = false

fullurl = true

concurrent = 100

time = 2M

url = http://www.example.com/

delay = 1

internet = false

benchmark = true

Might require this, which might work only with varnish:

proxy-host = 209.44.112.101

proxy-port = 80

Alternative is to hack /etc/hosts.

apachebench

Classic commandline:

ab2 -n 1000 -c 100 -X cache01.torproject.org https://example.com/

-X also doesn't work with ATS, modify /etc/hosts instead.

bombardier

We tested bombardier as an alternative to go-wrk in previous

benchmarks. The goal of using go-wrk was that it supported HTTP/2

(while wrk didn't), but go-wrk had performance issues, so we went

with the next best (and similar) thing.

Unfortunately, the bombardier package in Debian is not the HTTP benchmarking tool but a commandline game. It's still possible to install it in Debian with:

export GOPATH=$HOME/go

apt install golang

go get -v github.com/codesenberg/bombardier

Then running the benchmark is as simple as:

./go/bin/bombardier --duration=2m --latencies https://blog.torproject.org/

wrk

Note that wrk works similarly to bombardier, sampled above, and has

the advantage of being already packaged in Debian. Simple cheat sheet:

sudo apt install wrk

echo "10.0.0.0 target.example.com" >> /etc/hosts

wrk --latency -c 100 --duration 2m https://target.example.com/

The main disadvantage is that it doesn't (seem to) support HTTP/2 or similarly advanced protocols.

Other tools

Siege has trouble going above ~100 concurrent clients because of its design (and ulimit) limitations. Its interactive features are also limited, here's a set of interesting alternatives:

| Project | Lang | Proto | Features | Notes | Debian |

|---|---|---|---|---|---|

| ali | golang | HTTP/2 | real-time graph, duration, mouse support | unsearchable name | no |

| bombardier | golang | HTTP/2 | better performance than siege in my 2017 tests | RFP | |

| boom | Python | HTTP/2 | duration | rewrite of apachebench, unsearchable name | no |

| drill | Rust | scriptable, delay, stats, dynamic | inspired by JMeter and friends | no | |

| go-wrk | golang | no duration | rewrite of wrk, performance issues in my 2017 tests | no | |

| hey | golang | rewrite of apachebench, similar to boom, unsearchable name | yes | ||

| Jmeter | Java | interactive, session replay | yes | ||

| k6.io | JMeter rewrite with "cloud" SaaS | no | |||

| Locust | distributed, interactive behavior | yes | |||

| oha | Rust | TUI | inspired by hey | no | |

| Tsung | Erlang | multi | distributed | yes | |

| wrk | C | multithreaded, epoll, Lua scriptable | yes |

Note that the Proto(col) and Features columns are not exhaustive: a tool might support (say) HTTPS, HTTP/2, or HTTP/3 even if it doesn't explicitly mention it, although it's unlikely.

It should be noted that very few (if any) benchmarking tools seem to

support HTTP/3 (or even QUIC) at this point. Even HTTP/2 support is

spotty: for example, while bombardier supports HTTP/2, it only does so

with the slower net/http library at the time of writing (2021). It's

unclear how many (if any) other projects to support HTTP/2 as well.

More tools, unreviewed:

Builds can be performed on dixie.torproject.org.

Uploads must be go to palmeri.torproject.org.

Preliminary setup

In ~/.ssh/config:

Host dixie.torproject.org

ProxyCommand ssh -4 perdulce.torproject.org -W %h:%p

In ~/.dput.cf:

[tor]

login = *

fqdn = palmeri.torproject.org

method = scp

incoming = /srv/deb.torproject.org/incoming

Currently available distributions

- Debian:

lenny-backportexperimental-lenny-backportsqueeze-backportexperimental-squeeze-backportwheezy-backportexperimental-wheezy-backportunstableexperimental

- Ubuntu:

hardy-backportlucid-backportexperimental-lucid-backportnatty-backportexperimental-natty-backportoneiric-backportexperimental-oneiric-backportprecise-backportexperimental-precise-backportquantal-backportexperimental-quantal-backportraring-backportexperimental-raring-backport

Create source packages

Source packages must be created for the right distributions.

Helper scripts:

Build packages

Upload source packages to dixie:

dcmd rsync -v *.dsc dixie.torproject.org:

Build arch any packages:

ssh dixie.torproject.org

for i in *.dsc; do ~weasel/bin/sbuild-stuff $i && linux32 ~weasel/bin/sbuild-stuff --binary-only $i || break; done

Or build arch all packages:

ssh dixie.torproject.org

for i in *.dsc; do ~weasel/bin/sbuild-stuff $i || break; done

Packages with dependencies in deb.torproject.org must be built using

$suite-debtpo-$arch-sbuild, e.g. by running:

DIST=wheezy-debtpo ~weasel/bin/sbuild-stuff $DSC

Retrieve build results:

rsync -v $(ssh dixie.torproject.org dcmd '*.changes' | sed -e 's/^/dixie.torproject.org:/') .

Upload first package with source

Pick the first changes file and stick the source in:

changestool $CHANGES_FILE includeallsources

Sign it:

debsign $CHANGES_FILE

Upload:

dput tor $CHANGES_FILE

Start a first dinstall:

ssh -t palmeri.torproject.org sudo -u tordeb /srv/deb.torproject.org/bin/dinstall

Move changes file out of the way:

dcmd mv $CHANGES_FILE archives/

Upload other builds

Sign the remaining changes files:

debsign *.changes

Upload them:

dput tor *.changes

Run dinstall:

ssh -t palmeri.torproject.org sudo -u tordeb /srv/deb.torproject.org/bin/dinstall

Archive remaining build products:

dcmd mv *.changes archives/

Uploading admin packages

There is a separate Debian archive, on db.torproject.org, which can

be used to upload packages specifically designed to run on

torproject.org infrastructure. The following .dput.cf should allow

you to upload built packages to the server, provided you have the

required accesses:

[tpo-admin]

fqdn = db.torproject.org

incoming = /srv/db.torproject.org/ftp-archive/archive/pool/tpo-all/

method = sftp

post_upload_command = ssh root@db.torproject.org make -C /srv/db.torproject.org/ftp-archive

This might require fixing some permissions. Do a chmod g+w on the

broken directories if this happens. See also ticket 34371 for

plans to turn this into a properly managed Debian archive.

- Configuration

- Creating a new user

- Creating a role

- Sudo configuration

- Update a user's GPG key

- Other documentation

This document explains how to create new shell (and email) accounts. See also doc/accounts to evaluate new account requests.

Note that this documentation needs work, as it overlaps with user-facing user management procedures (doc/accounts), see issue 40129.

Configuration

This should be done only once.

git clone db.torproject.org:/srv/db.torproject.org/keyrings/keyring.git account-keyring

It downloads the git repository that manages the OpenPGP keyring. This keyring is essential as it allows users to interact with the LDAP database securely to perform password changes and is also used to send the initial password for new accounts.

When cloning, you may get the following message (see tpo/tpa/team#41785):

fatal: detected dubious ownership in repository at '/srv/db.torproject.org/keyrings/keyring.git'

If this happens, you need to run the following command as your user on

db.torproject.org:

git config --global --add safe.directory /srv/db.torproject.org/keyrings/keyring.git

Creating a new user

This procedure can be used to create a real account for a human being. If this is for a machine or another automated thing, create a role account (see below).

To create a new user, specific information need to be provided by the requester, as detailed in doc/accounts.

The short version is:

-

Import the provided key to your keyring. That is necessary for the script in the next point to work.

-

Verify the provided OpenPGP key

It should be signed by a trusted key in the keyring or in a message signed by a trusted key. See doc/accounts when unsure.

-

Add the OpenPGP key to the

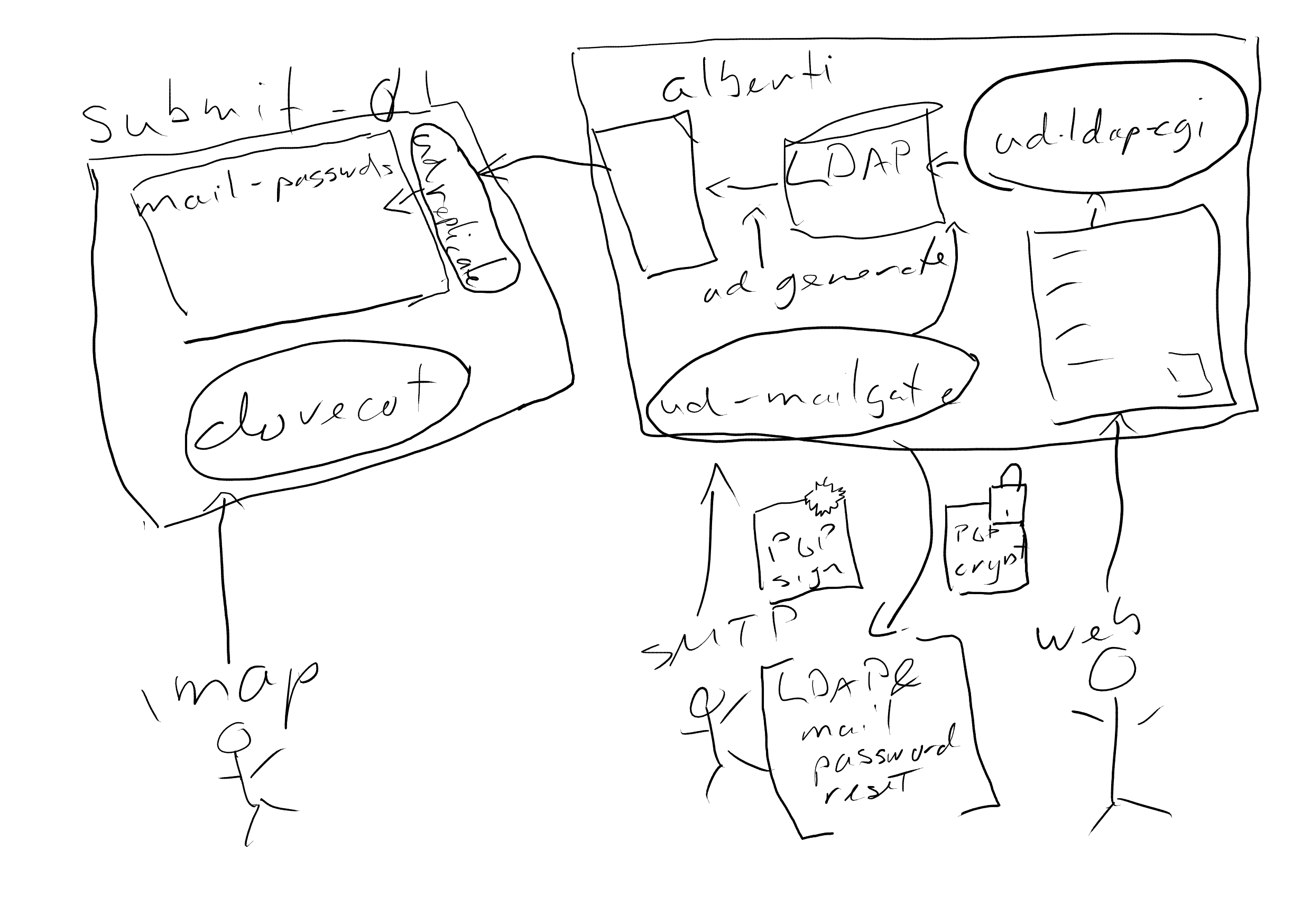

account-keyring.gitrepository and create the LDAP account:FINGERPRINT=0123456789ABCDEF0123456789ABCDEF01234567 && NEW_USER=alice && REQUESTER="bob in ticket #..." && ./NEW "$FINGERPRINT" "$NEW_USER" && git add torproject-keyring/"${NEW_USER}-${FINGERPRINT}.gpg" && git commit -m"new user ${NEW_USER} requested by ${REQUESTER}" && git push && ssh -tt $USER@alberti.torproject.org "ud-useradd -n && sudo -u sshdist ud-generate && sudo -H ud-replicate"

The last line will create the user on the LDAP server. See below for detailed information on that magic instruction line, including troubleshooting.

Note that $USER, in the above, shouldn't be explicitly expanded

unless your local user is different from your alberti user. In my

case, $USER, locally, is anarcat and that is how I login to

alberti as well.

Notice that when prompted for whom to add (a GPG search), enter the

full $FINGERPRINT verified above

What followed are detailed, step-by-step instructions, to be performed

after the key was added to the account-keyring.git repository (up

to the git push step above).

on the LDAP server

Those instructions are a copy of the last step of the above instructions, provided to clarify what each step does. Do not follow this procedure and instead follow the above.

The LDAP server is currently alberti. Those steps are supposed to be

ran as a regular user with LDAP write access.

-

create the user:

ud-useradd -nThis command asks a bunch of questions interactively that have good defaults, mostly taken from the OpenPGP key material, but it's important to review them anyways. in particular:

-

when prompted for whom to add (

a GPG search), enter the full$FINGERPRINTverified above -

the email forward is likely to be incorrect if the key has multiple email address as UIDs

-

the user might already be present in the Postfix alias file (

tor-puppet/modules/postfix/files/virtual) - in that case, use that email as theEmail forwarding addressif present and remove it from Puppet

-

-

synchronize the change:

sudo -u sshdist ud-generate && sudo -H ud-replicate

on other servers

This step is optional and can be used to force replication of the change to another server manually.

-

synchronize the change:

sudo -H ud-replicate -

run puppet:

sudo puppet agent -t

Creating a user without a PGP key

In most cases we want to use the person's PGP key to associate with their new LDAP account, but in some cases it may be difficult to get a person to generate a PGP key (and most importantly, keep managing that key effectively afterwards) and we might still want to grant the person an email account.

For those cases, it's possible to create an LDAP account without associating it to a PGP key.

First, generate a password and note it down somewhere safe temporarily. Then generate a hash for that password and noted it down. If you don't have this command on your computer, you can run that on alberti:

mkpasswd -m bcrypt-a

On alberti, find a free user ID with fab user.list-gaps (more information on

that command in the creating a role section)

Then, on alberti, connect to ldapvi and at the end of the file add something

like the following. Make sure to modify uid=[...] and all UID and GID numbers

and then the user's cn and sn fields to values that make sense for your case

and replace the value of mailPassword with the password hash you noted down

earlier. Keep the userPassword as-is since it will tell LDAP to lock the LDAP

account:

add gid=exampleuser,ou=users,dc=torproject,dc=org

gid: exampleuser

gidNumber: 15xx

objectClass: top

objectClass: debianGroup

add uid=exampleuser,ou=users,dc=torproject,dc=org

uid: exampleuser

objectClass: top

objectClass: inetOrgPerson

objectClass: debianAccount

objectClass: shadowAccount

objectClass: debianDeveloper

uidNumber: 15xx

gidNumber: 15xx

gecos: exampleuser,,,,

cn: Example

sn: User

userPassword: {crypt}$LK$

mailPassword: <REDACTED>

emailForward: <address>

loginShell: /bin/bash

mailCallout: FALSE

mailContentInspectionAction: reject

mailGreylisting: FALSE

mailDefaultOptions: FALSE

Save and exit and you should get prompted about adding two entries.

Lastly, refresh and resync the user database:

- On alberti:

sudo -u sshdist ud-generate && sudo -H ud-replicate - On submit-01 as root:

ud-replicate

The final step is then to contact the person on Signal and send them the password in a disappearing message.

troubleshooting

If the ud-useradd command fails with this horrible backtrace:

Updating LDAP directory..Traceback (most recent call last):

File "/usr/bin/ud-useradd", line 360, in <module>

lc.add_s(Dn, Details)

File "/usr/lib/python3/dist-packages/ldap/ldapobject.py", line 236, in add_s

return self.add_ext_s(dn,modlist,None,None)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/ldap/ldapobject.py", line 222, in add_ext_s

resp_type, resp_data, resp_msgid, resp_ctrls = self.result3(msgid,all=1,timeout=self.timeout)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/ldap/ldapobject.py", line 543, in result3

resp_type, resp_data, resp_msgid, decoded_resp_ctrls, retoid, retval = self.result4(

^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/ldap/ldapobject.py", line 553, in result4

ldap_result = self._ldap_call(self._l.result4,msgid,all,timeout,add_ctrls,add_intermediates,add_extop)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/ldap/ldapobject.py", line 128, in _ldap_call

result = func(*args,**kwargs)

^^^^^^^^^^^^^^^^^^^^

ldap.INVALID_SYNTAX: {'msgtype': 105, 'msgid': 6, 'result': 21, 'desc': 'Invalid syntax', 'ctrls': [], 'info': 'sn: value #0 invalid per syntax'}

... it's because you didn't fill the form properly. In this case, the

sn field ("Last name" in the form) was empty. If you don't have a

second name, just reuse the first name.

Creating a role

A "role" account is like a normal user, except it's for machines or services, not real people. It's useful to run different services with different privileges and isolation.

Here's how to create a role account:

-

Do not use

ud-groupaddandud-roleadd. They are partly broken. -

Run

fab user.list-gapsfrom a clone of thefabric-tasksrepository on alberti.tpo to find an unuseduidNumber/gidNumberpair.- Make sure the numbers match. If you are unsure, find the highest

uidNumber/gidNumberpair, increment that and use it as a number. You must absolutely make sure the number is not already in use. - the fabric task connects directly to ldap, which is firewalled from the exterior, so you won't be able to run the task from your computer.

- Make sure the numbers match. If you are unsure, find the highest

-

On LDAP host (currently alberti.tpo), as a user with LDAP write access, do:

ldapvi -ZZ --encoding=ASCII --ldap-conf -h db.torproject.org -D uid=${USER},ou=users,dc=torproject,dc=org -

Create a new

grouprole for the new account:- Copy-paste a previous

gidthat is also adebianGroup - Change the first word of the copy-pasted block to

addinstead of the integer - Change the

cn(first line) to the new group name - Change the

gid:field (last line) to the new group name - Set the

gidNumberto the number found in step 2

- Copy-paste a previous

-

Create the actual

userrole:- Copy-paste a previous

uidrole entry (with aobjectClass: debianRoleAccount). - Change the first word of the copy-pasted block to

addinstead of the integer - Change the

uid=,uid:,gecos:andcn:lines. - Set the

gidNumberanduidNumberto the number found in step 2 - If you need to set a mail password you can generate a blowcrypt password

with python (search for example of how to do this). Change the hash

identifier to

$2y$instead of$2b$.

- Copy-paste a previous

-

Add the role to the right host:

- Add a

allowedGroups: NEW-GROUPline to host entries that should have this role account deployed. - If the role account will only be used for sending out email by connecting to submission.torproject.org, the account does not need to be added to a host.

- Add a

-

Save the file, and accept the changes

-

propagate the changes from the LDAP host:

sudo -u sshdist ud-generate && sudo -H ud-replicate -

(sometimes) create the home directory on the server, in Puppet:

file { '/home/bridgescan': ensure => 'directory', mode => '0755', owner => 'bridgescan', group => 'bridgescan'; }

Sometimes a role account is made to start services, see the doc/services page for instructions on how to do that.

Sudo configuration

A user will often need to more permissions than its regular scope. For example, a user might need to be able to access a specific role account, as above, or run certain commands as root.

We have sudo configuration that enable us to give piecemeal accesses

like this. We often give accesses to groups instead of specific

users for easier maintenance.

Entries should be added by declaring a sudo::conf resource in the

relevant profile class in Puppet. For example:

sudo::conf { 'onbasca':

content => @(EOT)

# This file is managed by Puppet.

%onbasca ALL=(onbasca) ALL

| EOT

}

An alternative to this which avoids the need to create a profile class

containing a single sudo::conf resource is to add the configuration to

Hiera data. The equivalent for the above would be placing this YAML

snippet at the role (preferably) or node hierarchy:

profile::sudo::configs:

onbasca:

content: |

# This file is managed by Puppet.

%onbasca ALL=(onbasca) ALL

Sudo primer

As a reminder, the sudoers file syntax can be distilled to this:

FROMWHO HOST=(TOWHO) COMMAND

For example, this allows the group wheel (FROMWHO) to run the

service apache reload COMMAND as root (TOWHO) on the HOST

example:

%wheel example=(root) service apache reload

The HOST, TOWHO and COMMAND entries can be set to ALL. Aliases

can also be defined and many more keywords. In particular, the

NOPASSWD: prefix before a COMMAND will allow users to sudo

without entering their password.

Granting access to a role account

That being said, you can simply grant access to a role account by

adding users in the role account's group (through LDAP) then adding a

line like this in the sudoers file:

%roleGroup example=(roleAccount) ALL

Multiple role accounts can be specified. This is a real-world example

of the users in the bridgedb group having full access to the

bridgedb and bridgescan user accounts:

%bridgedb polyanthum=(bridgedb,bridgescan) ALL

Another real-world example, where members of the %metrics group can

run two different commands, without password, on the STATICMASTER

group of machines, as the mirroradm user:

%metrics STATICMASTER=(mirroradm) NOPASSWD: /usr/local/bin/static-master-update-component onionperf.torproject.org, /usr/local/bin/static-update-component onionperf.torproject.org

Update a user's GPG key

The account-keyring repository contains an update script ./UPDATE which takes

the ldap username as argument and automatically updates the key.

If you /change/ a user's key (to a new primary key), you also need to update

the user's keyFingerPrint attribute in LDAP.

After updating a key in the repository, the changes must be pushed to the remote hosted on the LDAP server.

Other documentation

Note that a lot more documentation about how to manage users is available in the LDAP documentation.

Cumin

Cumin is a tool to operate arbitrary shell commands on service/puppet hosts that match a certain criteria. It can match classes, facts and other things stored in the PuppetDB.

It is useful to do adhoc or emergency changes on a bunch of machines at once. It is especially useful to run Puppet itself on multiple machines at once to do progressive deployments.

It should not be used as a replacement for Puppet itself: most configuration on server should not be done manually and should instead be done in Puppet manifests so they can be reproduced and documented.

- Installation

- Avoiding spurious connection errors by limiting batch size

- Example commands

- Mangling host lists for Cumin consumption

- Disabling touch confirmation

- Discussion

Installation

Debian package

cumin has been available through debian archives since boorkworm, so you can simply:

sudo apt install cumin

If your distro does not have packages available, you can also install with a python virtualenv. See the section below for how to achieve this.

Initial configuration

cumin is relatively useless for us if it doesn't poke puppetdb to resolve

which hosts to run commands on. So we want to get it to talk to puppetdb. Also,

it gets pretty annoying to have to manually setup the ssh tunnel after getting

an error printed out by cumin, so we can get the tunnel setup automatically.

Once cumin is installed drop the following configuration in

~/.config/cumin/config.yaml:

transport: clustershell

puppetdb:

host: localhost

scheme: http

port: 6785

api_version: 4 # Supported versions are v3 and v4. If not specified, v4 will be used.

clustershell:

ssh_options:

- '-o User=root'

log_file: cumin.log

default_backend: puppetdb

Now you can simply use an alias like the following:

alias cumin="cumin --config ~/.config/cumin/config.yaml"

while making sure that you setup an ssh tunnel manually before calling cumin like the following:

ssh -L6785:localhost:8080 puppetdb-01.torproject.org

Or instead of the alias and the ssh command, you can try setting up an

automatic tunnel upon calling cumin. See the following section to set that

up.

Automatic tunneling to puppetdb with bash + systemd unit

This trick makes sure that you never forget to setup the ssh tunnel to puppedb

before running cumin. This section will replace cumin by a bash function,

so if you created a simple alias like mentioned in the previous section, you

should start by getting rid of that alias. Lastly, this trick requires nc in

order to verify if the tunnel port is open so, install it with:

sudo apt install nc

To get the automatic tunnel, we'll create a systemd unit that can bring the

tunnel up for us. Create the file

~/.config/systemd/user/puppetdb-tunnel@.service, making sure to create the

missing directories in the path:

[Unit]

Description=Setup port forward to puppetdb

After=network.target

[Service]

ExecStart=-/usr/bin/ssh -W localhost:8080 puppetdb-01.torproject.org

StandardInput=socket

StandardError=journal

Environment=SSH_AUTH_SOCK=%t/gnupg/S.gpg-agent.ssh

The Environment variable is necessary for the ssh command to be able

to request the key from your YubiKey, this may vary according to your

authentication system. It's only there because systemd might not have

the right variables from your environment, depending on how it's started.

And you'll need the following for socket activation, in