This page documents a general plan for the year 2021.

As a first this year, we ran a survey at the end of 2020 to help us identify critical services and pain points, so that we can focus our work in the coming year.

- Overall goals

- Quarterly breakdown

- 2020 roadmap evaluation

- Survey results

Overall goals

These goals are based on the user survey performed in December 2020 and were to be discussed within the TPA team in January 2021. They were formally adopted as a guide for TPA in the 2021-01-26 meeting.

As a reminder, the priority suggested by the survey is "service stabilisation" before "new services". Furthermore, some services are much more popular than others, so those services should get special attention. The overarching goals are therefore:

- stabilisation (particularly email but also GitLab, Schleuder, blog, service retirements)

- better communication (particularly with developers)

Must have

- email delivery improvements: generally postponed to 2022, as this needs a better architecture, although some work was still done:

- handle bounces in CiviCRM (issue 33037)

- systematically follow up on and respond to abuse complaints (https://gitlab.torproject.org/tpo/tpa/team/-/issues/40168)

- diagnose and resolve delivery issues (e.g. Yahoo, state.gov, Gmail, Gmail again)

- provide reliable delivery for users ("my email ends up in spam!"), possibly by following newer standards like SPF, DKIM, DMARC... (issue 40363)

- possible implementations:

- set up a new MX server to receive incoming email, with "real" (Let's Encrypt) TLS certificates, routing to the "legacy" (eugeni) mail server

- set up submit-01 to deliver people's email (issue 30608)

- split mailing lists out of eugeni (build a new Mailman 3 mail server?)

- split schleuder out of eugeni (or retire?) (issue)

- stop using eugeni as a smart host (each host sends its own email, particularly RT and CiviCRM)

- retire eugeni (if there is really nothing else left on it)

- retire old services:

- SVN (issue 17202)

- fpcentral (issue 40009)

- scale GitLab with ongoing and surely expanding usage:

- possibly split into multiple servers (#40479)

- throw more hardware at it: the VM was resized twice

- monitoring? we should monitor the runners, as they have Prometheus exporters

- provide reliable and simple continuous integration services:

- retire Jenkins (https://gitlab.torproject.org/tpo/tpa/team/-/issues/40218)

- replace it with GitLab CI, with Windows, Mac and Linux runners (delegated to the network team: yay! self-managed runners!)

- deployed more runners, some with very specific Docker configurations

- fix the blog formatting and comment moderation, possible solutions:

- migrate to a static website and Discourse https://gitlab.torproject.org/tpo/tpa/team/-/issues/40183 https://gitlab.torproject.org/tpo/tpa/team/-/issues/40297

- improve communications and monitoring:

- document "downtimes of 1 hour or longer" in a status page (issue 40138)

- reduce alert fatigue in Nagios: Nagios is going to require a redesign in 2022, even if just for upgrading it, because it is a breaking upgrade. Maybe rebuild a new server with Puppet, or consider replacing it with Prometheus + Alertmanager

- publicize debugging tools (Grafana, user-level logging in systemd services)

- encourage communication and ticket creation

- move root@ and TPA "noise" to RT (ticket 31242)

- make a real mailing list for admins so that gaba and non-technical staff can join (ticket)

- be realistic:

- cover for the day-to-day routine tasks

- reserve time for the unexpected (e.g. GitLab CI migration, should schedule team work)

- reduce expectations

- stay on budget: hosting expenses shouldn't rise beyond the budget (January 2020: 1050EUR/mth, January 2021: 1150EUR/mth, January 2022: 1470EUR/mth; a ~100EUR rise was approved, the rest is due to DDoS and an IPv4 billing change)

Nice to have

- improve sysadmin code base:

- implement an ENC for Puppet (issue 40358)

- avoid YOLO commits in Puppet (possibly: server-side linting, CI)

- publish our Puppet repository (ticket 29387)

- reduce dependency on Python 2 code (see short term LDAP plan)

- reduce dependency on LDAP (move hosts to Puppet? see mid term LDAP plan)

- avoid duplicate git hosting infrastructure:

- retire gitolite, gitweb (issue 36)

- retire more old services:

- testnet? talk to the network team

- gitolite (replaced with GitLab, see above)

- gitweb (replaced with GitLab, see above)

- provide secure, end-to-end authentication of Tor source code (issue 81)

- finish retiring old hardware (moly, ticket 29974)

- varnish to nginx conversion (#32462)

- GitLab Pages hosting (see issue tpo/tpa/gitlab#91)

- experiment with containers/kubernetes for CI/CD

- upgrade to bullseye - a few done, 12 out of 90!

- cover for some metrics services (issue 40125)

- help other teams integrate their monitoring with Prometheus/Grafana (e.g. Matrix alerts, tpo/tpa/team#40089, tpo/tpa/team#40080, tpo/tpa/team#31159)

Non-goals

- complete email service: not enough time / budget (or delegate + pay Riseup)

- "provide development/experimental VMs": would be possible through GitLab CD, to be investigated once we have GitLab CI solidly running

- "improve interaction between TPA and developers when new services are setup": see "improve communications" above, and "experimental VMs". The endgame here is people will be able to deploy their own services through Docker, but this will likely not happen in 2021

- static mirror network retirement / re-architecture: we want to test out GitLab Pages first and see if it can provide a decent alternative (update: some analysis was performed in the static site documentation)

- web development stuff: goals like "finish main website transition", "broken links on website"... should be covered by the web team, but TPA's capacity is affected by hiro working on the web stuff

- are service admins still a thing? should we cover for things like the metrics team? update: discussion postponed

- complete puppetization: legacy services are not in Puppet. That is fine: we keep maintaining them by hand when relevant, but new services should all be built in Puppet

- replace Nagios with Prometheus: not a short term goal, no clear benefit. reduce the noise in Nagios instead

- solr/search.tpo deployment (#33106), postponed to 2022

- web metrics (#32996), postponed to 2022

Quarterly breakdown

Q1

The first quarter of 2021 is fairly immediate, short-term work, as far as this roadmap is concerned. It should include items we are fairly certain to be able to complete within the next few months. Postponing those could cause problems.

- email delivery improvements:

- handle bounces in CiviCRM (issue 33037)

- follow up on abuse complaints (https://gitlab.torproject.org/tpo/tpa/team/-/issues/40168): we systematically check incoming bounces and actively remove people from the CiviCRM newsletter or mailing lists when we receive complaints

- diagnose and resolve delivery issues (e.g. Yahoo delivery problems, https://gitlab.torproject.org/tpo/tpa/team/-/issues/40168): problems seem to be due to the lack of SPF and DMARC records, which we can't add until we set up submit-01. Also, we need real certificates to accept mail over TLS from some servers, so we should set up an MX that supports that

- GitLab CI deployment (issue 40145)

- Jenkins retirement plan (https://gitlab.torproject.org/tpo/tpa/team/-/issues/40167)

- set up a long-term/sponsored Discourse instance?

- document "downtimes of 1 hour or longer" in a status page (issue 40138)
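The Q1 delivery item above attributes some problems to missing SPF and DMARC records. To make concrete what an SPF record asserts, here is a deliberately simplified evaluator in Python, loosely following RFC 7208: mechanisms are checked left to right, and the qualifier of the first matching one decides the result. It is only a sketch under strong assumptions: it understands `ip4:`, `ip6:` and `all` only, skips anything that needs DNS lookups (`include:`, `a`, `mx`, `redirect=`), and the example record uses documentation address ranges, not Tor's actual records. The `spf_result` name is hypothetical.

```python
import ipaddress

# SPF qualifiers and the result they produce ("+" is the default), per RFC 7208.
QUALIFIERS = {"+": "pass", "-": "fail", "~": "softfail", "?": "neutral"}


def spf_result(record: str, sender_ip: str) -> str:
    """Simplified SPF check: only ip4:, ip6: and all are evaluated."""
    terms = record.split()
    if not terms or terms[0].lower() != "v=spf1":
        return "none"  # not an SPF record at all
    ip = ipaddress.ip_address(sender_ip)
    for term in terms[1:]:
        qualifier = "+"
        if term[0] in QUALIFIERS:
            qualifier, term = term[0], term[1:]
        mechanism, _, value = term.partition(":")
        mechanism = mechanism.lower()
        if mechanism == "all":
            return QUALIFIERS[qualifier]
        if mechanism in ("ip4", "ip6") and value:
            # Bare addresses become /32 or /128 networks; an IPv4 address is
            # never "in" an IPv6 network (and vice versa), so no error is raised.
            if ip in ipaddress.ip_network(value, strict=False):
                return QUALIFIERS[qualifier]
        # include:, a, mx, exists: and redirect= need DNS lookups: skipped here.
    return "neutral"  # nothing matched and no redirect was followed


record = "v=spf1 ip4:192.0.2.0/24 ip6:2001:db8::/32 -all"  # hypothetical record
print(spf_result(record, "192.0.2.25"))    # pass
print(spf_result(record, "198.51.100.7"))  # fail
```

A real deployment would rely on an existing SPF implementation in the MTA; the point here is only that, without any record, receivers have nothing to check our servers against.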

Q2

The second quarter is a little more vague, but should still be "plannable". These are goals that are less critical and can afford to wait a little longer, or that are part of longer projects that will take more time to complete.

- retire old services: postponed

- SVN (issue 17202): postponed to Q4/2022

- fpcentral retirement plan (issue 40009)

- establish a plan for gitolite/gitweb retirement (issue 36): postponed to Q4

- improve sysadmin code base: postponed to 2022, or drive-by fixes

- scale/split GitLab? It seems to be working fine, and we set up new builders already

- onion v3 support for TPA services (https://gitlab.torproject.org/tpo/tpa/team/-/issues/32824)

Update: many of those tasks were not done because of a lack of staff, due to an unplanned leave.

Q3

In our experience, things get difficult to predict reliably by the third quarter. Last year, the workforce was cut by a third some time before this point, which totally changed basic assumptions about worker availability and priorities.

Also, a global pandemic basically tore the world apart, throwing everything in the air, so obviously plans kind of went out the window. Hopefully this won't happen again and the pandemic will somewhat subside, but we should plan for the worst.

- establish a solid blog migration plan, see the blog service and https://gitlab.torproject.org/tpo/tpa/team/-/issues/40183 tpo/tpa/team#40297

- vacations

- onboarding new staff

Update: as expected, this quarter and the previous one changed radically from what was planned, because of the staff changes. Focus will be on training and onboarding, and a well-deserved vacation.

Q4

Obviously, the fourth quarter is sheer crystal-ball gazing at this stage, but it should still be an interesting exercise to perform.

- blog retirement before Drupal 8 EOL (November 2021)

- migrate to a static website and Discourse https://gitlab.torproject.org/tpo/tpa/team/-/issues/40183 https://gitlab.torproject.org/tpo/tpa/team/-/issues/40297

- gitolite/gitweb retirement plan (issue 36): postponed to 2022

- Jenkins retirement

- SVN retirement plan (issue 17202)

- fpcentral retirement (issue 40009)

- redo the user survey and 2022 roadmap: abandoned (https://gitlab.torproject.org/tpo/tpa/team/-/issues/40307)

- BTCpayserver hosting (https://gitlab.torproject.org/tpo/tpa/team/-/issues/33750): pay for BTCpayserver hosting (tpo/tpa/team#40303)

- move root@ and TPA "noise" to RT (tpo/tpa/team#31242), make a real mailing list for admins so that gaba and non-technical staff can join

- set up submit-01 to deliver people's email (tpo/tpa/team#30608)

- donate website React.js / vanilla JS rewrite: postponed to 2022 (tpo/web/donate-static#45)

- rewrite bridges.torproject.org templates as part of Sponsor 30's project (https://gitlab.torproject.org/tpo/anti-censorship/bridgedb/-/issues/34322)

2020 roadmap evaluation

The following is a review of the 2020 roadmap.

Must have

- retiring old machines (moly in particular)

- move critical services in ganeti

- buster upgrades before LTS

- within budget: Hetzner invoices went from ~1050EUR/mth in January 2019 to 1200EUR/mth in January 2020, so more or less on track

Comments:

- critical services were swiftly moved into Ganeti

- moly has not been retired, but it is redundant so less of a concern

- a lot of the buster upgrades work was done by a volunteer (thanks @weasel!)

- the budget was slashed by half, but was still mostly respected

Nice to have

- new mail service

- conversion of the kvm* fleet to Ganeti for higher reliability and availability

- buster upgrade completion before anarcat's vacation

Comments:

- the new mail service was postponed indefinitely due to the workforce reduction: it was seen as a lower-priority project than stabilising the hardware layer

- buster upgrades were a bit later than expected, but still within the expected timeframe

- most of the KVM fleet was migrated (apart from moly) so that's still considered to be a success

Non-goal

- service admin roadmapping?

- Kubernetes cluster deployment?

Comments:

- we ended up doing a lot more service admin work than we usually do, or at least that we say we do, or at least that we say we want to do

- it might be useful to include service admin roadmapping in this work in order to predict important deployments in 2021: the GitLab migration, for example, took a long time and was underestimated

Missed goals

The following goals, set in the monthly roadmap, were not completed:

- moly retirement

- solr/search.tpo deployment

- SVN retirement

- web metrics (#32996)

- varnish to nginx conversion (#32462)

- submit service (#30608)

2021 preview

These are the ideas that were brought up in 2020 for 2021:

Objectives

- complete puppetization: does not seem like a priority at this point. We would prefer to improve the CI/CD story of Puppet instead

- experiment with containers/Kubernetes? Not a priority, but could be a tool for GitLab CI

- close and merge more services: still a goal

- replace Nagios with Prometheus? Not a short-term goal

- new hire? Definitely not a possibility in the short term, although we have been brought back full time

Monthly goals

- january: roadmap approval - still planned

- march/april: anarcat vacation - up in the air

Survey results

This roadmap benefits from a user survey sent to tor-internal@ in December. This section discusses the results of that survey and tries to draw general (qualitative) conclusions from that (quantitative) data.

The survey was run in issue 40061, and the data analysis was done in issue 40106.

Respondents information

- 26 responses: 12 full, 14 partial

- all paid workers: 9 out of 10 respondents were paid by TPI, the other was paid by another entity to work on Tor

- roles: the "who are you" section received 16 answers (some people picked several roles; percentages are relative to the 12 full responses):

- programmers: 9 (75%)

- management: 4 (33%; this includes a free-form "operations" answer, which should probably be offered as a choice in the next survey)

- documentation: 1 (8%)

- community: 1 (8%)

- "yes": 1 (as in: "yes I participate")

- (and yes, those add up to more than 100%: obviously, there is some overlap. We can also note that sysadmins did not respond to their own survey)

The survey should be assumed to represent mostly TPI employees, and not the larger tor-internal or Tor-big-t community.

General happiness

No one is sad with us! People are either happy (15: 58% of the total, 83% of those responding), exuberant (3: 12% of the total, 17% of those responding), or didn't answer.

Of those 18 people, 10 (56%) also said the situation had improved in the last year.

General prioritization

The priority for 2021 should be, according to the 12 people who answered:

- Stability: 6 (50%)

- New services: 3 (25%)

- Remove cruft: 1 (8%)

- "Making the interaction between TPA/dev smoother when new services are set up": 1 (8%)

- No answer: 1 (8%)

Services to add or retire

People identified the following services as missing:

- Discord

- a full email stack, or at least outbound email

- Discourse

- development/experimental VMs

- a "proper blog platform"

- "Continued enhancements to gitlab-lobby"

The following services had votes for retirement:

- git-rw (4, 33%)

- gitweb (4, 33%)

- SVN (3, 25%)

- blog (2, 17%)

- jenkins (2, 17%)

- fpcentral (1, 8%)

- schleuder (1, 8%)

- testnet (1, 8%)

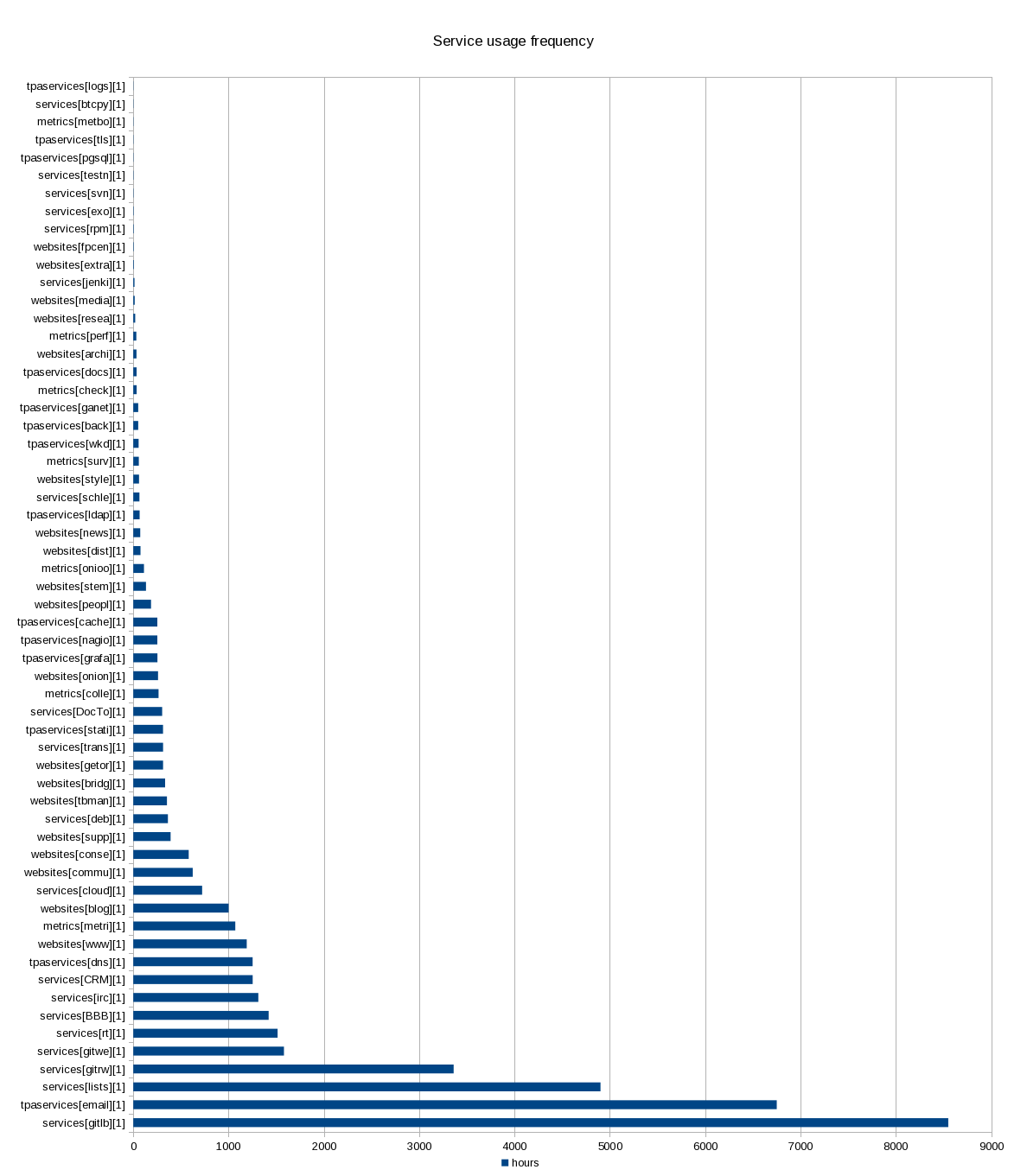

Graphs

These graphs were built from the results of the gigantic "service usage details" group, using the spreadsheet, which also provides more detailed information. A summary and detailed narrative of those results are provided below.

Usage

The X axis is not very clear: it is the cumulative estimate of the number of hours a service was used in the last year, across 11 respondents. From there, we can draw the following estimates of how often a service is used on average:

- 20 hours: yearly (about 2 hours per person per year)

- 100 hours: monthly (less than 1 hour per person per month)

- 500 hours: weekly (about 1 hour per person per week)

- 2500 hours: daily (assuming about 250 work days, 1 hour per person per day)

- 10000 hours: hourly (assuming about 4 hours of solid work per work day available)
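The buckets above boil down to comparing a service's cumulative yearly hours against fixed thresholds. The Python sketch below encodes that classification. The thresholds, the 11 respondents and the 250 work days come from the text; the strict `>` comparison is an assumption chosen to match the $X > 100$ and $X \le 100$ boundaries used further down in this section, and the function names are hypothetical.

```python
# Figures from the survey analysis: 11 respondents, about 250 work days a year.
RESPONDENTS = 11
WORK_DAYS = 250

# Cumulative yearly hours (summed over all respondents) marking each frequency.
THRESHOLDS = [
    (10000, "hourly"),
    (2500, "daily"),
    (500, "weekly"),
    (100, "monthly"),
    (20, "yearly"),
]


def frequency_bucket(total_hours: float) -> str:
    """Return the most frequent bucket whose threshold total_hours exceeds."""
    for threshold, label in THRESHOLDS:
        if total_hours > threshold:
            return label
    return "less than yearly"


def hours_per_person_per_day(total_hours: float) -> float:
    """Average daily use per respondent, spread over a year of work days."""
    return total_hours / RESPONDENTS / WORK_DAYS


# GitLab: 8550 cumulative hours, just under the "hourly" mark.
print(frequency_bucket(8550))                    # daily
print(round(hours_per_person_per_day(8550), 1))  # 3.1
```

With these thresholds, GitLab's 8550 hours land in the "daily" bucket while averaging about 3.1 hours per respondent per work day, which is why it is described as used "almost hourly".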

Based on those metrics, here are some highlights of this graph:

- GitLab is used almost hourly (8550 hours, N=11, about 3 hours per business day on average)

- Email and lists are next, say about 1-2 hours a day on average

- Git is used about daily (through either Gitolite or Gitweb)

- other services are used "more than weekly", but not quite daily:

- RT

- Big Blue Button

- IRC

- CiviCRM

- DNS is, strangely, considered to be used "weekly", but that question was obviously not clear enough

- many websites sit in the "weekly" range

- a majority of services are used more than monthly ($X > 100$) on average

- there's a long tail of services that are not used often: 27 services are used less than monthly ($X \le 100$), namely:

- onionperf

- archive.tpo

- the TPA documentation wiki (!)

- check.tpo

- WKD

- survey.tpo

- style.tpo

- schleuder

- LDAP

- newsletter.tpo

- dist.tpo

- ... and 13 services are even used less than yearly ($X \le 20$), namely:

- bitcoin payment system

- metrics bot

- test net

- SVN

- exonerator

- rpm archive

- fpcentral

- extra.tpo

- jenkins

- media.tpo

- some TPA services are marked as not frequently used, but that is probably due to a misunderstanding, as they are hidden or not directly accessible:

- centralized logging system (although with no sysadmin responding, that's expected, since they're the only ones with access)

- TLS (which is used to serve all websites and secure more internal connections, like email)

- PostgreSQL (database which backs many services)

- Ganeti (virtualization layer on which almost all our services run)

- Backups (I guess low usage is a good sign?)

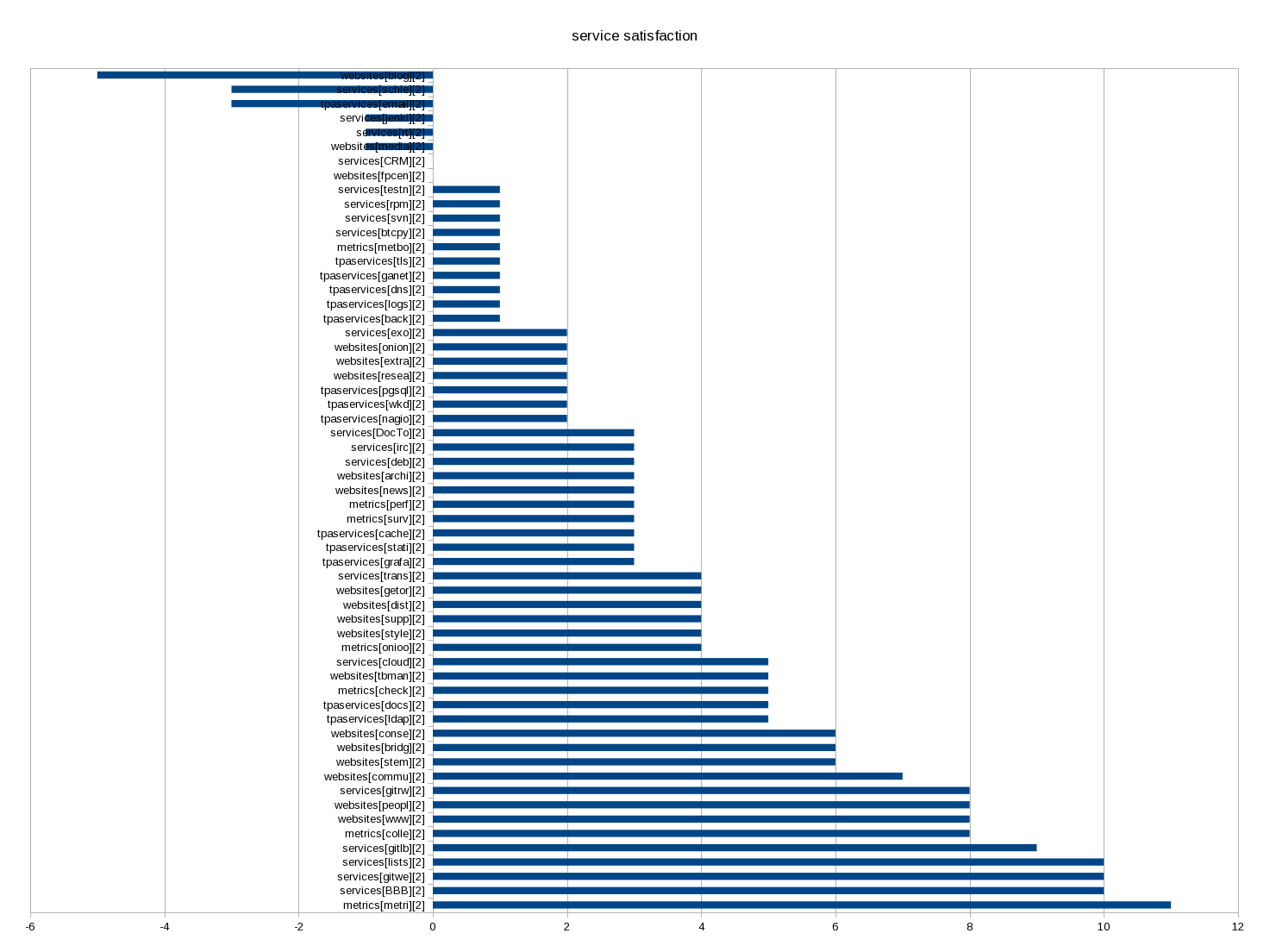

Happiness

The six "unhappy" or "sad" services on top are:

- blog; -5 = 3 happy minus 8 sad

- schleuder; -3 = just 3 sad

- email; -3 = 2 - 5

- jenkins; -1 = just 1 sad

- RT: -1 = 2 - 3

- media.tpo: -1 = 1 - 2

But those are just the services with a negative "happiness" score. There are other services with "sad" votes:

- CRM: 0 = 1 - 1

- fpcentral: 0 = 1 - 1

- backups (?): +1 = 2 - 1

- onion.tpo: +2 = 4 - 2

- research: +2 = 3 - 1

- irc: +3 = 4 - 1

- deb.tpo: +3 = 4 - 1

- support.tpo: +4 = 5 - 1

- nextcloud: +5 = 7 - 2

- tbmanual: +5 = 6 - 1

- the main website: +8 = 9 - 1

- gitlab: +9 = 10 - 1
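The scores in the two lists above are simply happy votes minus sad votes. As a small illustration, the Python sketch below recomputes them for a subset of those services, using the vote counts given above, and picks out the negative ones; the names `VOTES` and `happiness` are illustrative only, not part of the actual spreadsheet.

```python
# (happy, sad) vote counts, copied from the lists above (subset).
VOTES = {
    "blog": (3, 8),
    "schleuder": (0, 3),
    "email": (2, 5),
    "jenkins": (0, 1),
    "RT": (2, 3),
    "media.tpo": (1, 2),
    "CRM": (1, 1),
    "gitlab": (10, 1),
}


def happiness(happy: int, sad: int) -> int:
    """Net happiness score: happy votes minus sad votes."""
    return happy - sad


# Most unhappy first; sorted() is stable, so ties keep their listed order.
ranking = sorted(VOTES, key=lambda name: happiness(*VOTES[name]))
unhappy = [name for name in ranking if happiness(*VOTES[name]) < 0]
print(unhappy)  # ['blog', 'schleuder', 'email', 'jenkins', 'RT', 'media.tpo']
```

Note that a zero score (like CRM) can hide real unhappiness, which is why services with any "sad" vote are still reviewed.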

Summary of service usage details

This is a summary of the section below, which reviews each critical service in detail.

Actionable items

These are suggestions that could be acted upon in 2021:

- GitLab is a success, people want it expanded to replace git-rw/gitweb (Git hosting) and Jenkins (CI)

- email is a major problem: people want a Gmail replacement, or at least a way to deliver email without being treated as spam

- CiviCRM is a problem: it needs to handle bounces and we have frustrations with our consultants here

- the main website is a success, but there are concerns it still links to the old website

- some people would like to use the IRC bouncer but don't know how

- the blog is a problem: formatting issues and moderation cause significant pain, people suggest migrating to Discourse and a static blog

- people want a v3 onion.tpo which is planned already

Many of the email-related problems would benefit from splitting the email services across multiple servers, something that was previously discussed but should be prioritized in this year's roadmap. It also seems the delivery service should be put back on the roadmap this year.

Unactionable items

These do not have a clear path to resolution:

- RT receives a lot of spam and makes people unhappy

- schleuder is a problem: tedious to use, unreliable, not sure what the solution is, although maybe splitting the service to a different machine could help

- people are extremely happy with metrics.tpo, and happy with Big Blue Button

- NextCloud is a success, but collaborative editing is not working for key people, who stay on other (proprietary/commercial) services for collaboration. It is unclear what the solution is here.

Service usage details and happiness

This section drills down into each critical service. A critical service here is one that either:

- has at least one sad vote

- has a comment

- is used more than "monthly" on average

We have a lot of services: it's basically impossible to process all of them in a reasonable time frame, and doing so might not give us much more information anyway, as far as this roadmap is concerned.

GitLab

GitLab is a huge accomplishment. It's the most used service, which is exceptional considering it has been deployed only in the last few months. Out of 11 respondents, everyone uses it at least weekly, and most (6), hourly. So it has already become a critical service!

Yet people are extremely happy with it: out of those 11 people, everyone but a single soul said they were happy with it, which gives it one of the best happiness scores of all services (rank #5)!

Most comments about GitLab were basically asking to move more stuff to it (namely git-rw/gitweb and Jenkins), with someone even suggesting we "force people to migrate to GitLab". In particular, it seems we should look at retiring Jenkins in 2021: it has only one user (monthly), and an unhappy comment suggesting to migrate...

The one criticism of the service is "too much URL nesting", and that it is hard to find things, since they do not map to the git-rw project hierarchy.

So GitLab is a win. We need to make sure it keeps running and probably expand it in 2021.

It should be noted, however, that Gitweb and Gitolite (git-rw) are among the most frequently used services (4th and 5th place, respectively) and among those that make people happy (10/10, 3rd place, and 8/8, 9th place), so if/when we replace those services, we should be very careful that the web interface remains useful. One comment that may summarize the situation is:

Happy with gitolite and gitweb, but hope they will also be migrated to gitlab.

Email and lists

Email services are pretty popular: email and lists come second and third, right after GitLab! People are unanimously happy with the mailing lists service (which may be surprising), but happiness degrades severely when we talk about "email" in general. Most people (5 out of 7 respondents) are "sad" about the email service.

Comments about email are:

- "I don’t know enough to get away from Gmail"

- "Majority of my emails sent from my @tpo ends up in SPAM"

- "would like to have outgoing DKIM email someday"

So "fixing email" should probably be the top priority for 2021. In particular, we should be better at not ending up in spam filters (which is hard), provide an alternative to Gmail (maybe less hard), or at least document alternatives to Gmail (not hard).

RT

While we're talking about email, let's talk about Request Tracker, a lesser-known service (only 4 people use it, and 4 declared never using it), yet intensively used by those people (one person uses it hourly!), so it deserves special attention. Most of its users (3 out of 5) are unhappy with it. The concerns are:

- "Some automated ticket handling or some other way to manage the high level of bounce emails / tickets that go to donations@ would make my sadness go away"

- "Spam": presumably receiving too much spam in the queues

CiviCRM

Let's jump the queue a little (we'll come back to BBB and IRC below) and talk about the 9th most used service: CiviCRM. This is one of those services that few of our staff use, but that they use intensively (one person uses it hourly). And considering how important its service is (donations!), it probably deserves to be a higher priority. Strangely, of the 2 people who responded on the happiness scale, one was happy and one unhappy.

A good summary of the situation is:

The situation with Civi, and our donate.tpo portal, is a grand source of sadness for me (and honestly, our donors), but I think this issue lies more with the fact that the control of this system and architecture has largely been with Giant Rabbit and it’s been like pulling teeth to make changes. Civi is a fairly powerful tool that has a lot of potential, and I think moving away from GR control will make a big difference.

Generally, it seems the spam, bounce handling and email delivery issues mentioned in the email section apply here as well. Changing CiviCRM so that it handles bounces and delivers its own email will help delivery for other services, reduce abuse complaints, make CiviCRM work better, and generally improve everyone's life, so it should definitely be prioritized.
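To make "handling bounces" a little more concrete: a bounce is usually a delivery status notification (DSN, RFC 3464), a `multipart/report` message whose `message/delivery-status` part lists each recipient with an `Action:` field. The Python sketch below uses only the standard `email` package to extract the recipients marked as failed. It is a generic illustration under those assumptions, not how CiviCRM processes bounces internally, and the sample message and `failed_recipients` name are made up.

```python
from email import message_from_string


def failed_recipients(raw_message: str) -> list[str]:
    """Return addresses marked "Action: failed" in a DSN (RFC 3464)."""
    msg = message_from_string(raw_message)
    failed = []
    for part in msg.walk():
        if part.get_content_type() != "message/delivery-status":
            continue
        # The email package parses each header block of a delivery-status
        # part into its own Message; the first block holds per-message fields.
        for block in part.get_payload():
            action = (block.get("Action") or "").strip().lower()
            recipient = block.get("Final-Recipient")
            if action == "failed" and recipient:
                # Final-Recipient looks like "rfc822; user@example.com".
                failed.append(recipient.split(";", 1)[-1].strip())
    return failed


SAMPLE_BOUNCE = """\
From: MAILER-DAEMON@example.org
To: bounces@example.org
Subject: Undelivered Mail Returned to Sender
MIME-Version: 1.0
Content-Type: multipart/report; report-type=delivery-status; boundary="B"

--B
Content-Type: text/plain

Delivery to one recipient failed.

--B
Content-Type: message/delivery-status

Reporting-MTA: dns; mx.example.org

Final-Recipient: rfc822; alice@example.com
Action: failed
Status: 5.1.1

Final-Recipient: rfc822; bob@example.com
Action: delivered
Status: 2.0.0

--B--
"""

print(failed_recipients(SAMPLE_BOUNCE))  # ['alice@example.com']
```

Real-world bounces are messier (some MTAs send free-form text instead of a proper DSN), so any production bounce handling would need fallbacks beyond this.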

Big Blue Button

One of those services used intensively by many people (rank #7): 10 people use it, 2 monthly, 3 weekly and 5 daily! It's also one of the most "happy" services: 10 people responded they were happy with the service, which makes it the second-happiest service!

No negative comments, great idea, great new deployment (by a third party, mind you), nothing to fix here, it seems.

IRC

The next service in popularity is IRC (rank #8), used by 3 people (hourly, weekly and monthly, somewhat strangely). The main comment was about the lack of usability:

IRC Bouncer: I’d like to use it! I don’t know how to get started, and I am sure there is documentation somewhere, but I just haven’t made time for it and now it’s two years+ in my Tor time and I haven’t done it yet.

I'll probably just connect that person with the IRC bouncer maintainer and pretend there is nothing else to fix here. I honestly expected someone to ask us to set up a Matrix server (someone did suggest setting up a "Discord" server, so that might be it), but it didn't get explicitly mentioned, so it is not a priority, even if IRC is heavily used.

Main website

The new website is a great success. It's the 7th most used service according to our metrics, and also one that makes people the happiest (7th place).

The single negative comment on the website was "transition still not complete: links to old site still prominent (e.g. Documentation at the top)".

Maybe we should make sure more resources are transitioned to the new website (or elsewhere) in 2021.

Metrics

The metrics.torproject.org site is the service that makes people the happiest, of all the services surveyed: all 11 people who answered were happy with it. It's also one of the most used services overall, in 4th place.

Blog

People are pretty frustrated by the blog. Of all the people who answered the "happiness" question, all said they were "sad" about the service. In the free-form field, comments mentioned:

- "comment formatting still not fixed", "never renders properly"

- [needs something to] produce link previews (in a privacy preserving way)

- "The comment situation is totally unsustainable but I feel like that’s a community decision vs. sysadmin thing", "comments are awful", "Comments can get out of hand and it's difficult to have productive conversations there"

- "not intuitive, difficult to follow"

- "difficult to find past blog posts[...]: no [faceted search or sort by date vs relevance]"

A positive comment:

- I like Drupal and it’s easy to use for me

A good summary has been provided: "Drupal: everyone is unhappy with the solution right now: hard to do moderation, etc. Static blog + Discourse would be better."

I highlight the blog because it's one of the most frequently used services, yet one of the "saddest", so it should probably be made a priority in 2021.

NextCloud

People are generally (77% of 9 respondents) happy with this popular service (rank 14, used by 9 people, 1 yearly, 2 monthly, 4 weekly, 2 daily).

Pain points:

- discovery problems:

Discovering what documents there are is not easy; I wish I had a view of some kind of global directory structure. I can follow links onto nextcloud, but I never ever browse to see what's there, or find anything there on my own.

- shared documents are too unreliable:

I want to love NextCloud because I understand the many benefits, but oh boy, it’s a problem for me, particularly in shared documents. I constantly lose edits, so I do not and cannot rely on NextCloud to write anything more serious than meeting notes. Shared documents take 3-5 minutes to load over Tor, and 2+ minutes to load outside of Tor. The flow is so clunky that I just can’t use it regularly other than for document storage.

I've ran into sync issues with a lot of users using the same pad at once. These forced us to not use nextcloud for collab in my team except when really necessary.

So overall, NextCloud is heavily used, but has serious reliability problems that keep it from properly replacing Google Docs for collaboration. It is unclear which way forward we can take here without getting involved in hosting the service or in upstream development, neither of which is likely to be an option for 2021.

onion.tpo

A moderately popular service (rank 26), mentioned here because two people were unhappy with it: it "seems not maintained", and "would love to have v3 onions, I know the reason we don't have yet, but still, this should be a priority".

Thankfully, the latter is a priority that was originally aimed at 2020, and should definitely be delivered in 2021. It is unclear what to do about the other concern.

Schleuder

3 people responded on the happiness scale, and all were sad. Those three (presumably) use the service yearly, monthly and weekly, respectively, so it's not as important (27th service in popularity) as the blog (3rd service!), yet I mention it here because of the severity of the unhappiness.

Comments were:

- "breaks regularly and tedious to update keys, add or remove people"

- "GPG is awful and I wish we could get rid of it"

- "tracking who has responded and who hasn't (and how to respond!) is nontrivial"

- "applies encryption to unencrypted messages, which have already gone over the wire in the clear. This results in a huge amount of spam in my inbox"

In general, considering no one is happy with the service, we should consider looking for alternatives, plain retirement, or really fixing those issues. Maybe making it part of a "big email split" where the service runs on a different server (with service admins having more access) would help?

Ignored services

I stopped looking at services below the 500 hours threshold or so (technically: after the first 20 services, which puts the mark at 350 hours). I made an exception for any service with a "sad" comment.

The following services were above the defined thresholds but were not covered above:

- DNS: one person uses it "hourly", and is "happy", nothing to change

- Community portal: largely used, users happy, no change suggested

- consensus-health: same

- support portal and tb manual: generally happy, well used, except "FAQ answers don't go into why enough and only regurgitate the surface-level advice. Moar links to support claims made" - should be communicated to the support team

- debian package repository: "debian package not usable", otherwise people are happy

- someone was unhappy about backups, but did not seem to state why

- research: very little use, comment: "whenever I need to upload something to research.tpo, it seems like I need to investigate how to do so all over again. This is probably my fault for not remembering? "

- media: people are unhappy about it: "it would be nice to have something better than what we have now, which is an old archive" and "unmaintained", but it's unclear how to move forward on this from TPA's perspective

- fpcentral: one yearly user, one unhappy person suggested to retire it, which is already planned (https://gitlab.torproject.org/tpo/tpa/team/-/issues/40009)

Every other service not mentioned here should consider itself "happy". In particular, people are generally happy with websites, TPA and metrics services overall, so congratulations to every sysadmin and service admin out there, and thanks to everyone who filled in the survey for their feedback!

Notes for the next survey

- average time: 16 minutes (median: 14 min), much longer than the estimated 5-10 minutes

- unsurprisingly, the biggest time drain was the service group, taking between 10 and 20 minutes

- maybe remove or merge some services next time?

- remove the "never" option for the service? same as not answering...

- the service group responses are hard to parse: each option ends up being a separate question, which required a lot more processing than can be done directly in Limesurvey

- worse: the data is mangled up together: the "happiness" and "frequency" data is interleaved which required some annoying data massaging after - might be better to split those in two next time?

- consider an automated Python script to extract the data from the survey next time? processing took about 8 hours this time around, consider xkcd 1205 of course

- everyone who answered that question (8 out of 12, 67%) agreed to do the survey again next year

Obviously, at least one person correctly identified that the "survey could use some work to make it less overwhelming." Unfortunately, no concrete suggestion on how to do so was provided.

How the survey data was processed

Most of the questions were analyzed directly in Limesurvey by:

- visiting the admin page, then responses and statistics, then the statistics page

- in the stats page, checking the following:
  - Data selection: Include "all responses"
  - Output options:
    - Show graphs
    - Graph labels: Both
  - In the "Response filters", pick everything but the "Services satisfaction and usage" group

- clicking "View statistics" on top

Then we went through the results and described those manually here. We could also have exported a PDF but it seemed better to have a narrative.

The "Services satisfaction and usage" group required more work. The above "statistics" page is still important for that group (just select that group, and group in one column for easier display), both to verify things and to access the critical comments section. On top of that, the data was exported as CSV with the following procedure:

- in responses and statistics again, pick Export -> Export responses

- check the following:
  - Headings:
    - Export questions as: Question code
  - Responses:
    - Export answers as: Answer codes
  - Columns:
    - Select columns: use shift-click to select the right question set

- then click "export"
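The export still leaves the frequency and happiness answers interleaved, one row per respondent. As a minimal sketch of the "automated Python script" idea from the notes above, the function below groups those answers per service. It assumes hypothetical column headers of the form `SERVICE[freq]` and `SERVICE[happy]`; the real question codes used in the survey are not documented here, so the header parsing would need adjusting.

```python
import csv
import io
from collections import defaultdict


def load_service_answers(csv_file):
    """Group interleaved survey columns by service.

    Hypothetical header format: "SERVICE[freq]" and "SERVICE[happy]".
    Returns {service: {"freq": [codes...], "happy": [answers...]}}.
    """
    answers = defaultdict(lambda: {"freq": [], "happy": []})
    for row in csv.DictReader(csv_file):
        for column, value in row.items():
            # Skip extra unnamed fields, non-service columns and blank answers.
            if not column or "[" not in column or not value:
                continue
            service, kind = column.rstrip("]").split("[", 1)
            if kind in ("freq", "happy"):
                answers[service][kind].append(value)
    return dict(answers)


# Tiny made-up sample standing in for the exported CSV file.
sample = io.StringIO("id,gitlab[freq],gitlab[happy]\n1,A6,Happy\n2,A3,\n")
print(load_service_answers(sample))
```

Reading from a file object (rather than a path) makes it easy to feed either the real export or a small inline sample like the one above.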

The resulting CSV file was imported in a LibreOffice spreadsheet and mangled with a bunch of formulas and graphs. Originally, I used this logic:

- for the happy/sad questions, I assigned one point to "Happy" answers and -1 point to "Sad" answers.

- for the usage questions, I followed the question codes:
  - A1: Never
  - A2: Yearly
  - A3: Monthly
  - A4: Weekly
  - A5: Daily
  - A6: Hourly

For usage the idea is that a service still gets a point if someone answered "never" instead of just skipping it. It shows acknowledgement of the service's existence, in some way, and is better than not answering at all, but not as good as "once a year", obviously.
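The original scoring can be sketched in Python as follows. This is a reconstruction of the logic described above, not the actual spreadsheet formulas, and the "Happy"/"Sad" strings are placeholders for whatever answer codes the export really contained. It also shows why the usage scores were hard to interpret:

```python
def happiness_score(answers: list[str]) -> int:
    """Original happiness score: +1 per "Happy" answer, -1 per "Sad" answer."""
    points = {"Happy": 1, "Sad": -1}  # placeholder answer values
    return sum(points.get(answer, 0) for answer in answers)


def naive_usage_score(codes: list[str]) -> int:
    """Original usage score: the numeric suffix of each answer code.

    "A1" ("never") is worth 1 point, up to "A6" ("hourly") worth 6 points.
    """
    return sum(int(code[1:]) for code in codes)


# Two very different usage patterns end up with the same score:
print(naive_usage_score(["A6"] * 10))  # 10 hourly users  -> 60
print(naive_usage_score(["A3"] * 20))  # 20 monthly users -> 60
```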

I later changed the way frequency scores are computed, because the numbers produced by the above scheme are quite meaningless: GitLab was at "60", which could mean 10 people using it hourly or 20 people using it monthly, two vastly different usage scenarios.

Instead, I've come up with a magic formula:

$$H = 10 \cdot 5^{(A-3)}$$

Where $H$ is a number of hours and $A$ is the value of the suffix to the answer code (e.g. $1$ for A1, $2$ for A2, ...).

This gives us the following values, which roughly fit the number of hours per year spent using a service at the given frequency:

- A1 ("never"): 0.4

- A2 ("yearly"): 2

- A3 ("monthly"): 10

- A4 ("weekly"): 50

- A5 ("daily"): 250

- A6 ("hourly"): 1250
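The formula is easy to check mechanically. A minimal Python sketch, assuming the answer codes are strings like `"A4"` as produced by the "Answer codes" export option:

```python
def estimated_hours(code: str) -> float:
    """Map a frequency answer code ("A1".."A6") to estimated hours per year.

    Implements H = 10 * 5**(A - 3), where A is the numeric suffix of the code.
    """
    a = int(code[1:])  # "A4" -> 4
    return 10 * 5.0 ** (a - 3)


for a in range(1, 7):
    code = f"A{a}"
    print(code, estimated_hours(code))
```

Running the loop should reproduce the six values listed above; each step up in frequency multiplies the estimate by 5.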

Obviously, there are more than 250 days and 1250 hours in a year, but if you account for holidays and lost cycles, and squint a little, it kind of works. Also, "Never" should probably be renamed to "rarely" or just removed in the next survey, but it still reflects the original idea of giving credit to the "recognition" of the service.

This gives us a much better approximation of the number of person-hours per year each service is used, and therefore of which services should be prioritized. I also believe it is closer to reality: the previous calculation suggested that gitweb and git-rw were used equally by the team, which surprised me. The new scores seem to better reflect actual use (3 monthly, 1 weekly and 6 daily users for gitweb, versus 1 monthly, 2 weekly, 3 daily and 2 hourly users for git-rw).
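As a worked example, the sketch below applies the formula to the gitweb and git-rw answer counts quoted above. It only includes those counts; any "never" or "yearly" answers not listed in the text are left out, so the totals are illustrative rather than the exact figures from the spreadsheet.

```python
def estimated_hours(code: str) -> float:
    """Yearly hours for a frequency answer code such as "A5": H = 10 * 5**(A - 3)."""
    return 10 * 5.0 ** (int(code[1:]) - 3)


def yearly_hours(counts: dict[str, int]) -> float:
    """Sum the estimated hours over all respondents, given {answer code: count}."""
    return sum(n * estimated_hours(code) for code, n in counts.items())


# Answer counts as quoted in the text (other answers, if any, are omitted).
gitweb = {"A3": 3, "A4": 1, "A5": 6}  # 3 monthly, 1 weekly, 6 daily
git_rw = {"A3": 1, "A4": 2, "A5": 3, "A6": 2}  # 1 monthly, 2 weekly, 3 daily, 2 hourly

print(yearly_hours(gitweb))  # 1580.0
print(yearly_hours(git_rw))  # 3360.0
```

With these counts, git-rw comes out at roughly twice the person-hours of gitweb, mostly because of the two hourly users.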