The "static component" or "static mirror" system is a set of servers, scripts and services designed to publish content over the world wide web (HTTP/HTTPS). It is designed to be highly available and distributed, a sort of content distribution network (CDN).

Tutorial

This documentation is about administering the static site components, from a sysadmin perspective. User documentation lives in doc/static-sites.

How-to

Adding a new component

- add the component to Puppet, in modules/staticsync/data/common.yaml:

      onionperf.torproject.org:
        master: staticiforme.torproject.org
        source: staticiforme.torproject.org:/srv/onionperf.torproject.org/htdocs/

- create the directory on staticiforme:

      ssh staticiforme "mkdir -p /srv/onionperf.torproject.org/htdocs/ \
          && chown torwww:torwww /srv/onionperf.torproject.org/{,htdocs} \
          && chmod 770 /srv/onionperf.torproject.org/{,htdocs}"

- add the host to DNS, if not already present, see service/dns, for example add this line in dns/domains/torproject.org:

      onionperf IN CNAME static

- add an Apache virtual host, by adding a line like this in service/puppet to modules/roles/templates/static-mirroring/vhost/static-vhosts.erb:

      vhost(lines, 'onionperf.torproject.org')

- add an SSL service, by adding a line in service/puppet to modules/roles/manifests/static_mirror_web.pp:

      ssl::service { 'onionperf.torproject.org':
        ensure => 'ifstatic',
        notify => Exec['service apache2 reload'],
        key    => true,
      }

  This also requires generating an X509 certificate, for which we use Let's Encrypt. See letsencrypt for details.

- add an onion service, by adding another onion::service line in service/puppet to modules/roles/manifests/static_mirror_onion.pp:

      onion::service {
          [...]
          'onionperf.torproject.org',
          [...]
      }

- run Puppet on the master and mirrors:

      ssh staticiforme puppet agent -t
      cumin 'C:roles::static_mirror_web' 'puppet agent -t'

  The latter is done with cumin, see also service/puppet for a way to do jobs on all hosts.

- consider creating a new role and group for the component if none match its purpose, see create-a-new-user for details:

      ssh alberti.torproject.org ldapvi -ZZ --encoding=ASCII --ldap-conf -H ldap://db.torproject.org -D "uid=$USER,ou=users,dc=torproject,dc=org"

- if you created a new group, you will probably need to modify the legacy_sudoers file to grant a user access to the role/group, see modules/profile/files/sudo/legacy_sudoers in the tor-puppet repository (and service/puppet to learn about how to make changes to Puppet). onionperf is a good example of how to create a sudoers file. Edit the file with visudo so it checks the syntax:

      visudo -f modules/profile/files/sudo/legacy_sudoers

  This, for example, is the line that was added for onionperf:

      %torwww,%metrics STATICMASTER=(mirroradm) NOPASSWD: /usr/local/bin/static-master-update-component onionperf.torproject.org, /usr/local/bin/static-update-component onionperf.torproject.org

Removing a component

This procedure can be followed if we remove a static component. We should, however, generally keep a redirection to another place to avoid breaking links, so the instructions also include notes on how to keep a "vanity site" around.

This procedure is common to all cases:

- remove the component from Puppet, in modules/staticsync/data/common.yaml

- remove the Apache virtual host, by removing a line like this in service/puppet from modules/roles/templates/static-mirroring/vhost/static-vhosts.erb:

      vhost(lines, 'onionperf.torproject.org')

- remove the SSL service, by removing a line in service/puppet from modules/roles/manifests/static_mirror_web.pp:

      ssl::service { 'onionperf.torproject.org':
        ensure => 'ifstatic',
        notify => Exec['service apache2 reload'],
        key    => true,
      }

- remove the onion service, by removing the onion::service line in service/puppet from modules/roles/manifests/static_mirror_onion.pp:

      onion::service {
          [...]
          'onionperf.torproject.org',
          [...]
      }

- remove the sudo rules for the role user

- If we do want to keep a vanity site for the redirection, we should also do this:

  - add an entry to roles::static_mirror_web_vanity, in the ssl::service block of modules/roles/manifests/static_mirror_web_vanity.pp

  - add a redirect in the template (modules/roles/templates/static-mirroring/vhost/vanity-vhosts.erb), for example:

        Use vanity-host onionperf.torproject.org ^/(.*)$ https://gitlab.torproject.org/tpo/metrics/team/-/wikis/onionperf

- deploy the changes globally, replacing {staticsource} with the component's source server hostname, often staticiforme or static-gitlab-shim:

      ssh {staticsource} puppet agent -t
      ssh static-master-fsn puppet agent -t
      cumin 'C:roles::static_mirror_web or C:roles::static_mirror_web_vanity' 'puppet agent -t'

- remove the home directory specified on the server:

      ssh {staticsource} "mv /srv/onionperf.torproject.org/htdocs/ /srv/onionperf.torproject.org/htdocs-OLD ; echo rm -rf /srv/onionperf.torproject.org/htdocs-OLD | at now + 7 days"
      ssh static-master-fsn "rm -rf /srv/static.torproject.org/master/onionperf.torproject.org*"
      cumin -o txt 'C:roles::static_mirror_web' 'mv /srv/static.torproject.org/mirrors/onionperf.torproject.org /srv/static.torproject.org/mirrors/onionperf.torproject.org-OLD'
      cumin -o txt 'C:roles::static_mirror_web' 'echo rm -rf /srv/static.torproject.org/mirrors/onionperf.torproject.org-OLD | at now + 7 days'

- consider removing the role user and group in LDAP, if there are no files left owned by that user

If we do not want to keep a vanity site, we should also do this:

- remove the host from DNS, if present, see service/dns. This can be either in dns/domains.git or dns/auto-dns.git

- remove the Let's Encrypt certificate, see letsencrypt for details

Pager playbook

Out of date mirror

WARNING: this playbook is out of date, as this alert was retired in the Prometheus migration. There's a long-term plan to restore it, but considering those alerts were mostly noise, it has not been prioritized, see tpo/tpa/team#42007.

If you see an error like this in Nagios:

mirror static sync - deb: CRITICAL: 1 mirror(s) not in sync (from oldest to newest): 95.216.163.36

It means that Nagios has found that the given host (hetzner-hel1-03.torproject.org, in this case) is not in sync for the deb component, which is https://deb.torproject.org.

In this case, it was because of a prolonged outage on that host, which made it unreachable to the master server (tpo/tpa/team#40432).

The solution is to run a manual sync. This can be done by, for example, running a deploy job in GitLab (see static-shim) or running static-update-component by hand, see doc/static-sites.

In this particular case, the solution is simply to run this on the static source (palmeri at the time of writing):

static-update-component deb.torproject.org

Disaster recovery

TODO: add a disaster recovery.

Restoring a site from backups

The first thing you need to decide is where you want to restore from. Typically you want to restore the site from the source server. If you do not know where the source server is, you can find it in tor-puppet.git, in the modules/staticsync/data/common.yaml.

Then head to the Bacula director to perform the restore:

ssh bacula-director-01

And run the restore procedure. Enter the bacula console:

# bconsole

Then the procedure, in this case we're restoring from static-gitlab-shim:

restore

5 # (restores latest backup from a host)

77 # (picks static-gitlab-shim from the list)

mark /srv/static-gitlab-shim/status.torproject.org

done

yes

Then wait for the restore to complete. You can check the progress by typing mess to dump all messages (warning: that floods your console) or status director. When the restore is done, you can type quit.

The files will be restored directly on the host, in /var/tmp/bacula-restores. You can change that path to restore in-place in the last step, by typing mod instead of yes. The rest of the guide assumes the restored files are in /var/tmp/bacula-restores/.

Now go on the source server:

ssh static-gitlab-shim.torproject.org

If you haven't restored in place, you should move the current site aside, if present:

mv /srv/static-gitlab-shim/status.torproject.org /srv/static-gitlab-shim/status.torproject.org.orig

Check the permissions are correct on the restored directory:

ls -l /var/tmp/bacula-restores/srv/static-gitlab-shim/status.torproject.org/ /srv/static-gitlab-shim/status.torproject.org.orig/

Typically, you will want to give the files to the shim:

chown -R static-gitlab-shim:static-gitlab-shim /srv/static-gitlab-shim/status.torproject.org/

Then rsync the site in place:

rsync -a /var/tmp/bacula-restores/srv/static-gitlab-shim/status.torproject.org/ /srv/static-gitlab-shim/status.torproject.org/

We rsync the site, rather than moving it, in case whatever destroyed the site happens again: this keeps a fresh copy of the backup in /var/tmp.

Once that is completed, you need to trigger a static component update:

static-update-component status.torproject.org

The site is now restored.

Reference

Installation

Servers are mostly configured in Puppet, with some exceptions. See the design section below for details on the Puppet classes in use. Typically, a web mirror will use roles::static_mirror_web, for example.

Web mirror setup

To set up a web mirror, create a new server with the following entries in LDAP:

allowedGroups: mirroradm

allowedGroups: weblogsync

Then run these commands on the LDAP server:

puppet agent -t

sudo -u sshdist ud-generate

sudo -H ud-replicate

This will ensure the mirroradm user is created on the host.

Then the host needs the following Puppet configuration in Hiera-ENC:

classes:

- roles::static_mirror_web

The following should also be added to the node's Hiera data:

staticsync::static_mirror::get_triggered: false

The get_triggered parameter ensures the host will not block static site updates while it's doing its first sync.

Then Puppet can be run on the host, after apache2 is installed to make sure the apache2 puppet module picks it up:

apt install apache2

puppet agent -t

You might need to reboot to get some firewall rules to load correctly:

reboot

The server should start a sync after reboot. However, it's likely that the SSH keys it uses to sync have not been propagated to the master server. If the sync fails, you might receive an email with lots of lines like:

[MSM] STAGE1-START (2021-03-11 19:38:59+00:00 on web-chi-03.torproject.org)

It might be worth running the sync by hand, with:

screen sudo -u mirroradm static-mirror-run-all

The server may also need to be added to the static component configuration in modules/staticsync/data/common.yaml, if it is to carry a full mirror, or exclude some components. For example, web-fsn-01 and web-chi-03 both carry all components, so they need to be added to all limit-mirrors statements, like this:

    components:
      # [...]
      dist.torproject.org:
        master: static-master-fsn.torproject.org
        source: staticiforme.torproject.org:/srv/dist-master.torproject.org/htdocs
        limit-mirrors:
          - archive-01.torproject.org
          - web-cymru-01.torproject.org
          - web-fsn-01.torproject.org
          - web-fsn-02.torproject.org
          - web-chi-03.torproject.org

Once that is changed, make sure to run puppet agent -t on the relevant static master. After running puppet on the static master, the static-mirror-run-all command needs to be rerun on the new mirror (although it will also run on the next reboot).
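To check which components a new mirror would still miss, the limit-mirrors logic can be sketched as follows. This is a minimal illustration and not the actual staticsync code: it assumes the YAML has already been parsed into dictionaries, that a component without a limit-mirrors key is carried by every mirror, and the function names and sample data are hypothetical.

```python
# Hypothetical sketch: which mirrors carry a component, given data shaped
# like modules/staticsync/data/common.yaml (already parsed into dicts).
# Assumption: a component without "limit-mirrors" goes to every mirror.

def mirrors_for(component, components, all_mirrors):
    """Return the sorted list of mirrors expected to carry a component."""
    limit = components[component].get("limit-mirrors")
    if limit is None:
        return sorted(all_mirrors)
    return sorted(m for m in all_mirrors if m in limit)


def components_missing(mirror, components):
    """Return components whose limit-mirrors list excludes the mirror."""
    return sorted(
        name
        for name, conf in components.items()
        if "limit-mirrors" in conf and mirror not in conf["limit-mirrors"]
    )


# Illustrative data only, not the real configuration
components = {
    "dist.torproject.org": {
        "master": "static-master-fsn.torproject.org",
        "limit-mirrors": [
            "archive-01.torproject.org",
            "web-fsn-01.torproject.org",
        ],
    },
    "onionperf.torproject.org": {
        "master": "static-master-fsn.torproject.org",
    },
}
all_mirrors = ["web-fsn-01.torproject.org", "web-chi-03.torproject.org"]

# Lists the components whose limit-mirrors would need the new mirror added
print(components_missing("web-chi-03.torproject.org", components))
```

A mirror meant to carry everything should end up with an empty list here.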

When the sync is finished, you can remove this line:

staticsync::static_mirror::get_triggered: false

... and the node can be added to the various files in dns/auto-dns.git.

Then, to be added to Fastly, this was also added to Hiera:

roles::cdn_torproject_org::fastly_backend: true

Once that change is propagated, you need to change the Fastly configuration using the tools in the cdn-config-fastly repository. Note that only one of the nodes is a "backend" for Fastly, and typically not the nodes that are in the main rotation (so that the Fastly frontend survives if the main rotation dies). But the main rotation servers act as a backup for the main backend.

Troubleshooting a new mirror setup

While setting up a new web mirror, you may run into some roadblocks.

- Running puppet agent -t fails after adding the mirror to puppet:

      Error: Cannot create /srv/static.torproject.org/mirrors/blog.staging.torproject.net; parent directory /srv/static.torproject.org/mirrors does not exist

  This error happens when running puppet before running an initial sync on the mirror. Run screen sudo -u mirroradm static-mirror-run-all and then re-run puppet.

- Running an initial sync on the new mirror fails with this error:

      mirroradm@static-master-fsn.torproject.org: Permission denied (publickey).
      rsync: connection unexpectedly closed (0 bytes received so far) [Receiver]
      rsync error: unexplained error (code 255) at io.c(228) [Receiver=3.2.3]

  The mirror's SSH keys haven't been added to the static master yet. Run puppet agent -t on the relevant static master (in this case static-master-fsn.torproject.org).

- Running an initial sync fails with this error:

      Error: Could not find user mirroradm

  Puppet hasn't run on the LDAP server, so ud-replicate wasn't able to open a connection to the new mirror. Run these commands on the LDAP server, and then try the sync again:

      puppet agent -t
      sudo -u sshdist ud-generate
      sudo -H ud-replicate

SLA

This service is designed to be highly available. All web sites should keep working (maybe with some performance degradation) even if one of the hosts goes down. It should also absorb and tolerate moderate denial of service attacks.

Design

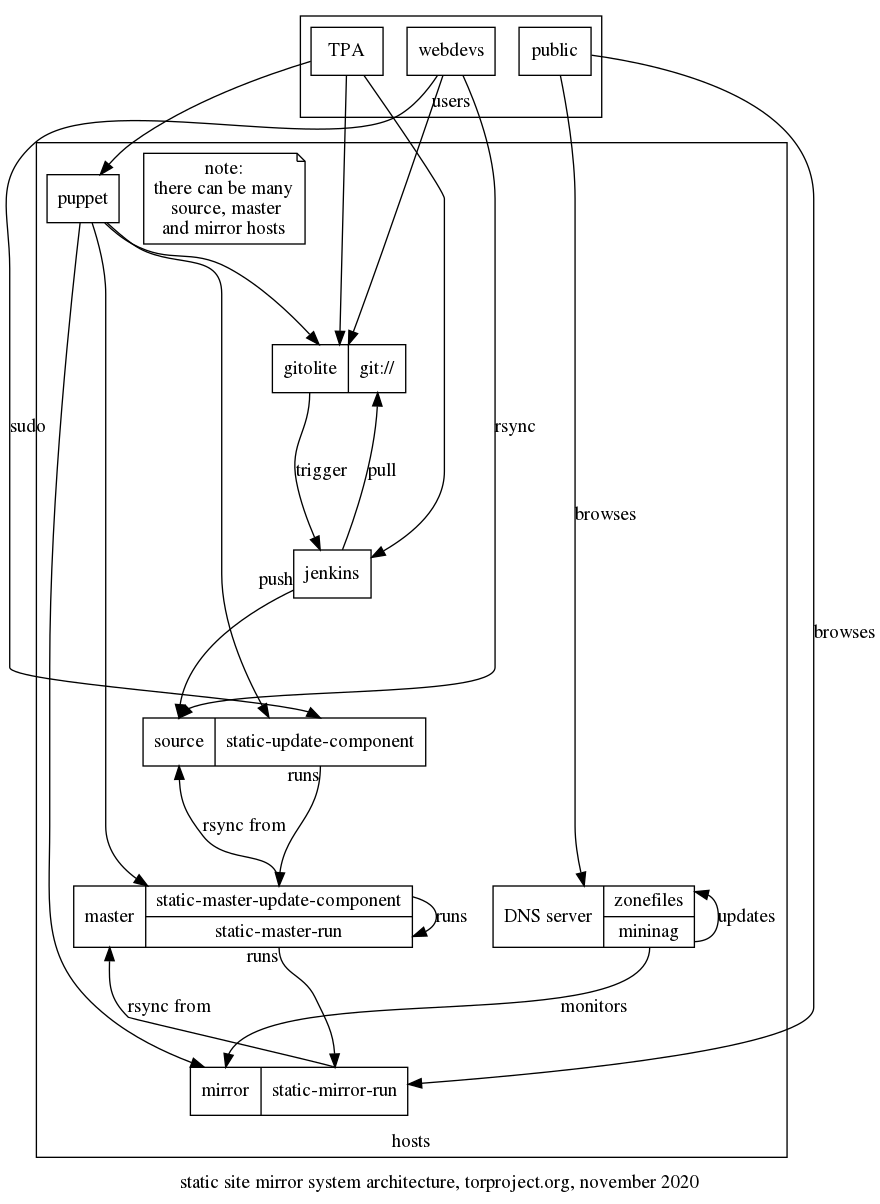

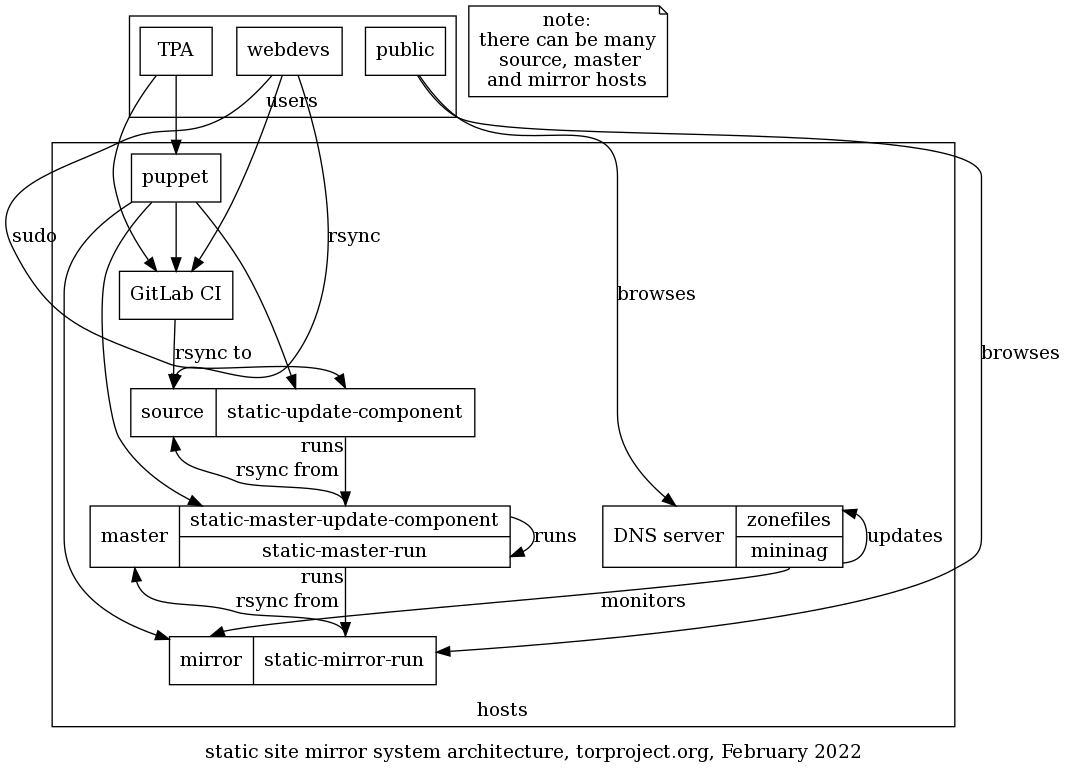

The static mirror system is built of three kinds of hosts:

- source - builds and hosts the original content (roles::static_source in Puppet)
- master - receives the contents from the source, dispatches it (atomically) to the mirrors (roles::static_master in Puppet)
- mirror - serves the contents to the user (roles::static_mirror_web in Puppet)

Content is split into different "components", which are units of content that get synchronized atomically across the different hosts. Those components are defined in a YAML file in the tor-puppet.git repository (modules/staticsync/data/common.yaml at the time of writing, but it might move to Hiera, see issue 30020 and puppet).

The GitLab service is used to maintain source code that is behind some websites in the static mirror system. GitLab CI deploys built sites to a static-shim which ultimately serves as a static source that deploys to the master and mirrors.

This diagram summarizes how those components talk to each other graphically:

A narrative of how changes get propagated through the mirror network is detailed below.

A key advantage of that infrastructure is the higher availability it provides: whereas individual virtual machines are power-cycled for scheduled maintenance (e.g. kernel upgrades), static mirroring machines are removed from the DNS during their maintenance.

Change process

When data changes, the source is responsible for running static-update-component, which instructs the master via SSH to run static-master-update-component. The master transfers a new copy of the source data using rsync(1) and, upon successful copy, swaps it with the current copy.

The current copy on the master is then distributed to all actual mirrors, again placing a new copy alongside their current copy using rsync(1).

Once the data has successfully made it to all mirrors, the mirrors are instructed to swap the new copy with their current copy, at which point the updated data will be served to end users.
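The two-phase nature of this process (stage everywhere, then swap everywhere) can be illustrated with a small in-memory simulation. This is only a sketch: the Mirror class and push function are hypothetical stand-ins for the rsync and SSH steps, and what happens when a mirror fails to stage is an assumption made here (nobody swaps), not something taken from the actual scripts.

```python
# Hypothetical in-memory model of the push: every mirror first stages the
# new copy next to its live copy; only if all of them succeed does anyone swap.

class Mirror:
    def __init__(self, name, reachable=True):
        self.name = name
        self.reachable = reachable
        self.live = None      # copy currently served to users
        self.staged = None    # new copy waiting next to the live one

    def stage(self, content):
        """Stand-in for the rsync from the master; False on failure."""
        if not self.reachable:
            return False
        self.staged = content
        return True

    def swap(self):
        """Make the staged copy the live one."""
        self.live, self.staged = self.staged, None


def push(content, mirrors):
    """Stage on all mirrors, then swap only if every stage succeeded."""
    # The list forces every mirror to attempt staging before we decide.
    if not all([m.stage(content) for m in mirrors]):
        return False  # assumption: nobody swaps, old content stays live
    for m in mirrors:
        m.swap()
    return True


mirrors = [Mirror("mirror-a"), Mirror("mirror-b")]
print(push("version 1", mirrors), [m.live for m in mirrors])
```

Because the swap only happens after every mirror holds the new copy, users should not see a mix of old and new content for long, whichever mirror they reach.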

Source code inventory

The source code of the static mirror system is spread out in different files and directories in the tor-puppet.git repository:

- modules/staticsync/data/common.yaml lists the "components"
- modules/roles/manifests/ holds the different Puppet roles:
  - roles::static_mirror - a generic mirror, see staticsync::static_mirror below
  - roles::static_mirror_web - a web mirror, including most (but not necessarily all) components defined in the YAML configuration. Configures Apache (which the above doesn't). Includes roles::static_mirror (and therefore staticsync::static_mirror)
  - roles::static_mirror_onion - configures the hidden services for the web mirrors defined above
  - roles::static_source - a generic static source, see staticsync::static_source, below
  - roles::static_master - a generic static master, see staticsync::static_master below
- modules/staticsync/ is the core Puppet module holding most of the source code:
  - staticsync::static_source - source, which:
    - exports the static user SSH key to the master, punching a hole in the firewall
    - collects the SSH keys from the master(s)
  - staticsync::static_mirror - a mirror which does the above and:
    - deploys the static-mirror-run and static-mirror-run-all scripts (see below)
    - configures a cron job for static-mirror-run-all
    - exports a configuration snippet of /etc/static-clients.conf for the master
  - staticsync::static_master - a master which:
    - deploys the static-master-run and static-master-update-component scripts (see below)
    - collects the static-clients.conf configuration file, which is the hostname ($::fqdn) of each of the static_sync::static_mirror exports
    - configures the basedir (currently /srv/static.torproject.org) and user home directory (currently /home/mirroradm)
    - collects the SSH keys from sources, mirrors and other masters
    - exports the SSH key to the mirrors and sources
  - staticsync::base, included by all of the above, deploys:
    - /etc/static-components.conf: a file derived from the modules/staticsync/data/common.yaml configuration file
    - /etc/staticsync.conf: polyglot (bash and Python) configuration file propagating the base (currently /srv/static.torproject.org), masterbase (currently $base/master) and staticuser (currently mirroradm) settings
    - staticsync-ssh-wrap and static-update-component (see below)

TODO: try to figure out why we have /etc/static-components.conf and not directly the YAML file shipped to hosts, in staticsync::base. See the static-components.conf.erb Puppet template.

NOTE: the modules/staticsync/data/common.yaml was previously known as modules/roles/misc/static-components.yaml but was migrated into Hiera as part of tpo/tpa/team#30020.

Scripts walk through

- static-update-component is run by the user on the source host.

  If not run under sudo as the staticuser already, it sudo's to the staticuser, re-executing itself. It then SSHes to the static-master for that component to run static-master-update-component.

  LOCKING: none, but see static-master-update-component

- static-master-update-component is run on the master host.

  It rsync's the contents from the source host to the static master, and then triggers static-master-run to push the content to the mirrors.

  The sync happens to a new <component>-updating.incoming-XXXXXX directory. On sync success, <component> is replaced with that new tree, and the static-master-run trigger happens.

  LOCKING: exclusive locks are held on <component>.lock

- static-master-run triggers all the mirrors for a component to initiate syncs.

  When all mirrors have an up-to-date tree, they are instructed to update the cur symlink to the new tree.

  To begin with, static-master-run copies <component> to <component>-current-push.

  This is the tree all the mirrors then sync from. If the push was successful, <component>-current-push is renamed to <component>-current-live.

  LOCKING: exclusive locks are held on <component>.lock

- static-mirror-run runs on a mirror and syncs components.

  There is a symlink called cur that points to either tree-a or tree-b for each component. The cur tree is the one that is live, the other one usually does not exist, except when a sync is ongoing (or a previous one failed and we keep a partial tree).

  During a sync, we sync to the tree-<X> that is not the live one. When instructed by static-master-run, we update the symlink and remove the old tree.

  static-mirror-run rsync's either -current-push or -current-live for a component.

  LOCKING: during all of static-mirror-run, we keep an exclusive lock on the <component> directory, i.e., the directory that holds tree-[ab] and cur.

- static-mirror-run-all

  Run static-mirror-run for all components on this mirror, fetching the -live- tree.

  LOCKING: none, but see static-mirror-run.

- staticsync-ssh-wrap

  wrapper for ssh job dispatching on source, master, and mirror.

  LOCKING: on master, when syncing -live- trees, a shared lock is held on <component>.lock during the rsync process.
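The tree-a / tree-b layout used on the mirrors can be sketched in a few lines. This is a simplified illustration and not the actual static-mirror-run code (which is written in bash): the function names are hypothetical, the rsync step is replaced by writing files locally, locking is left out, and the sync and the symlink flip are done in one call whereas the real flip waits for an instruction from static-master-run.

```python
# Hypothetical sketch of the tree-a / tree-b layout on a mirror: sync into
# the tree that is not live, then repoint the "cur" symlink and drop the old tree.
import os
import shutil
import tempfile


def inactive_tree(component_dir):
    """Return the tree name that 'cur' does not currently point to."""
    cur = os.path.join(component_dir, "cur")
    live = os.readlink(cur) if os.path.islink(cur) else None
    return "tree-b" if live == "tree-a" else "tree-a"


def sync_and_flip(component_dir, files):
    """Write files into the inactive tree, then make it the live one."""
    target = inactive_tree(component_dir)
    target_path = os.path.join(component_dir, target)
    shutil.rmtree(target_path, ignore_errors=True)  # leftover partial tree
    os.makedirs(target_path)
    for name, data in files.items():  # stand-in for the rsync step
        with open(os.path.join(target_path, name), "w") as f:
            f.write(data)

    cur = os.path.join(component_dir, "cur")
    old = os.readlink(cur) if os.path.islink(cur) else None
    tmp = cur + ".new"
    os.symlink(target, tmp)
    os.replace(tmp, cur)  # rename over the old symlink, atomic on POSIX
    if old:
        shutil.rmtree(os.path.join(component_dir, old))
    return target


component_dir = tempfile.mkdtemp()
print(sync_and_flip(component_dir, {"index.html": "one"}))
print(sync_and_flip(component_dir, {"index.html": "two"}))
```

Creating the new symlink under a temporary name and renaming it over cur means a reader following cur sees either the old tree or the new one, never a missing link.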

The scripts are written in bash except static-master-run, written in Python 2.

Authentication

The authentication between the static site hosts is entirely done through SSH. The source hosts are accessible by normal users, who can sudo to a "role" user which has privileges to run the static sync scripts as the sync user. That user then has privileges to contact the master server which, in turn, can log in to the mirrors over SSH as well.

The user's sudo configuration is therefore critical and that sudoers configuration could also be considered part of the static mirror system.

The GitLab runners have SSH access to the static-shim service infrastructure, so they can build and push websites, through a private key kept in the project, the public part of which is deployed by Puppet.

Jenkins build jobs

WARNING: Jenkins was retired in late 2021. This documentation is now irrelevant and is kept only for historical purposes. The static-shim with GitLab CI has replaced this.

Jenkins is used to build some websites and push them to the static mirror infrastructure. The Jenkins jobs get triggered from git-rw git hooks, and are (partially) defined in jenkins/tools.git and jenkins/jobs.git. Those are fed into jenkins-job-builder to build the actual job. Those jobs actually build the site with hugo or lektor and package an archive that is then fetched by the static source.

The build scripts are deployed on staticiforme, in the ~torwww home directory. Those get triggered through the ~torwww/bin/ssh-wrap program, hardcoded in /etc/ssh/userkeys/torwww, which picks the right build job based on the argument provided by the Jenkins job, for example:

- shell: "cat incoming/output.tar.gz | ssh torwww@staticiforme.torproject.org hugo-website-{site}"

Then the wrapper eventually does something like this to update the static component on the static source:

rsync --delete -v -r "${tmpdir}/incoming/output/." "${basedir}"

static-update-component "$component"

Issues

There is no issue tracker specifically for this project. File or search for issues in the team issue tracker with the ~static-component label.

Monitoring and testing

Static site synchronisation is monitored in Nagios, using a block in nagios-master.cfg which looks like:

    -
      name: mirror static sync - extra
      check: "dsa_check_staticsync!extra.torproject.org"
      hosts: global
      servicegroups: mirror

That script (actually called dsa-check-mirrorsync) makes an HTTP request to every mirror and checks the timestamp inside a "trace" file (.serial) to make sure everyone has the same copy of the site.
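The comparison at the heart of such a check can be sketched as below. This does not reproduce dsa-check-mirrorsync: the HTTP fetching is left out, the function name and host names are hypothetical, and it assumes the serial is an integer that increases with each update.

```python
# Hypothetical sketch: given the serial read from each mirror's trace file,
# report mirrors that lag behind the newest serial, oldest first.
# Assumption: serials are integers that increase with each update.

def out_of_sync(serials):
    """Return mirrors not at the newest serial, from oldest to newest."""
    if not serials:
        return []
    newest = max(serials.values())
    stale = [(serial, host) for host, serial in serials.items() if serial < newest]
    return [host for serial, host in sorted(stale)]


# Illustrative values only
serials = {
    "mirror-a.example.org": 1615491539,
    "mirror-b.example.org": 1615491539,
    "mirror-c.example.org": 1615400000,
}
print(out_of_sync(serials))
```

An empty result means every mirror reports the same serial, which is the healthy state.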

There's also a miniature reimplementation of Nagios called mininag which runs on the DNS server. It performs health checks on the mirrors and takes them out of the DNS zonefiles if they become unavailable or have a scheduled reboot. This makes it possible to reboot a server and have the server taken out of rotation automatically.
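The rotation decision described here amounts to a simple filter. The sketch below is not mininag's code: the data structure, field names and host names are hypothetical, and the actual health checks and zonefile generation are left out.

```python
# Hypothetical sketch of the rotation decision: keep only mirrors that pass
# their health check and have no reboot scheduled.

def rotation(mirrors):
    """Return the names of mirrors that should stay in the DNS zone."""
    return sorted(
        name
        for name, state in mirrors.items()
        if state["healthy"] and not state["reboot_scheduled"]
    )


# Illustrative values only
mirrors = {
    "mirror-a.example.org": {"healthy": True, "reboot_scheduled": False},
    "mirror-b.example.org": {"healthy": True, "reboot_scheduled": True},
    "mirror-c.example.org": {"healthy": False, "reboot_scheduled": False},
}
print(rotation(mirrors))
```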

Logs and metrics

All tor webservers keep a minimal amount of logs. The IP address and time (but not the date) are cleared (00:00:00). The referrer is disabled on the client side by sending the Referrer-Policy "no-referrer" header.

The IP addresses are replaced with:

- 0.0.0.0 - HTTP request
- 0.0.0.1 - HTTPS request
- 0.0.0.2 - hidden service request
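As an illustration of the scheme above, the mapping can be written out as follows. The real anonymization happens in the web server configuration, not in Python, so the function names and the request type labels here are hypothetical.

```python
# Hypothetical sketch of the address replacement described above: the client
# address is discarded and replaced by a marker for the request type.
from datetime import datetime

MARKERS = {
    "http": "0.0.0.0",
    "https": "0.0.0.1",
    "onion": "0.0.0.2",
}


def anonymized_address(request_type):
    """Return the placeholder logged instead of the client IP address."""
    return MARKERS[request_type]


def anonymized_time(timestamp):
    """Keep the date but clear the time of day."""
    return timestamp.replace(hour=0, minute=0, second=0, microsecond=0)


print(anonymized_address("https"), anonymized_time(datetime(2021, 3, 11, 19, 38, 59)))
```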

Logs are kept for two weeks.

Errors may be sent by email.

Metrics are scraped by Prometheus using the "Apache" exporter.

Backups

The source hosts are backed up with Bacula without any special provision.

TODO: check if master / mirror nodes need to be backed up. Probably not?

Other documentation

Discussion

Overview

The goal of this discussion section is to consider improvements to the static site mirror system at torproject.org. It might also apply to debian.org, but the focus is currently on TPO.

The static site mirror system has been designed for hosting Debian.org content. Interestingly, it is not used for the operating system mirrors itself, which are synchronized using another, separate system (archvsync).

The static mirror system was written for Debian.org by Peter Palfrader. It has also been patched by other DSA members (Stephen Gran and Julien Cristau both have more than 100 commits on the old code base).

This service is critical: it distributes the main torproject.org websites, but also software releases like the tor project source code and other websites.

Limitations

The maintenance status of the mirror code is unclear: while it is still in use at Debian.org, it is made of a few sets of components which are not bundled in a single package. This makes it hard to follow "upstream", although, in theory, it should be possible to follow the dsa-puppet repository. In practice, that's pretty difficult because the dsa-puppet and tor-puppet repositories have disconnected histories. Even if they had a common ancestor, the code is spread over multiple directories, which makes it hard to track. There has been some refactoring to move most of the code into a staticsync module, but we still have files strewn over other modules.

The static site system has no unit tests, linting, release process, or CI. Code is deployed directly through Puppet, on the live servers.

There hasn't been a security audit of the system, as far as we could tell.

Python 2 porting is probably the most pressing issue in this project: the static-master-run program is written in old Python 2.4 code. Thankfully it is fairly short and should be easy to port.

The YAML configuration duplicates the YAML parsing and data structures present in Hiera (see issue 30020 and puppet).

Jenkins integration

NOTE: this section is now irrelevant, because Jenkins was retired in favor of the static-shim to GitLab CI. A new site now requires only a change in GitLab and Puppet, successfully reducing this list to 2 services and 2 repositories.

For certain sites, the static site system requires Jenkins to build websites, which further complicates deployments. A static site deployment requiring Jenkins needs updates on 5 different repositories, across 4 different services:

- a new static component in the (private) tor-puppet.git repository
- a build script in the jenkins/tools.git repository
- a build job in the jenkins/jobs.git repository
- a new entry in the ssh wrapper in the admin/static-builds.git repository
- a new entry in the gitolite-admin.git repository

Goals

Must have

- high availability: continue serving content even if one (or a few?) servers go down

- atomicity: the deployed content must be coherent

- high performance: should be able to saturate a gigabit link and withstand simple DDOS attacks

Nice to have

- cache-busting: changes to a CSS or JavaScript file must be propagated to the client reasonably quickly

- possibly host Debian and RPM package repositories

Non-Goals

- implement our own global content distribution network

Approvals required

Should be approved by TPA.

Proposed Solution

The static mirror system certainly has its merits: it's flexible, powerful and provides a reasonably easy to deploy, high availability service, at the cost of some level of obscurity, complexity, and high disk space requirements.

Cost

Staff, mostly. We expect a reduction in cost if we reduce the number of copies of the sites we have to keep around.

Alternatives considered

- GitLab pages could be used as a source?

- the cache system could be used as a replacement in the front-end

TODO: benchmark gitlab pages vs (say) apache or nginx.

GitLab pages replacement

It should be possible to replace parts or the entirety of the system progressively, however. A few ideas:

- the mirror hosts could be replaced by the cache system. this would possibly require shifting the web service from the mirror to the master or at least some significant re-architecture

- the source hosts could be replaced by some parts of the GitLab Pages system. unfortunately, that system relies on a custom webserver, but it might be possible to bypass that and directly access the on-disk files provided by the CI.

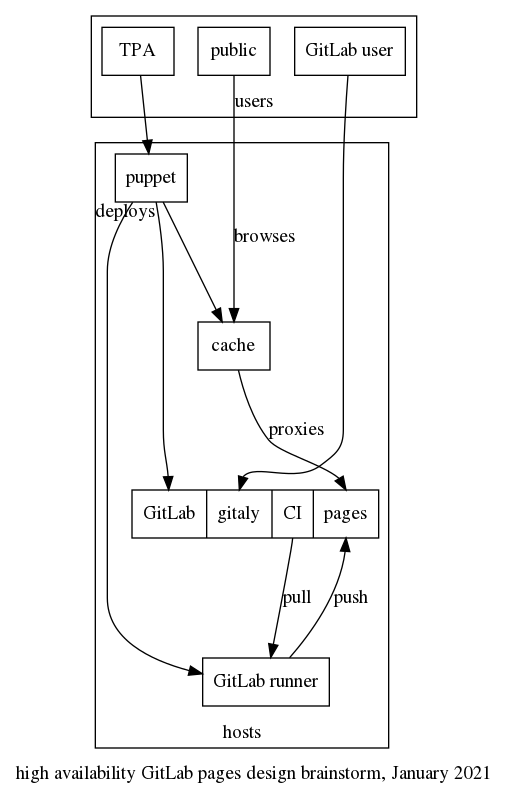

The architecture would look something like this:

Details of the GitLab pages design and installation is available in our GitLab documentation.

Concerns about this approach:

- GitLab pages is a custom webserver which issues TLS certs for the custom domains and serves the content, it's unclear how reliable or performant that server is

- The pages design assumes the existence of a shared filesystem to deploy content, currently NFS, but they are switching to S3 (as explained above), which introduces significant complexity and moves away from the classic "everything is a file" approach

- The new design also introduces a dependency on the main GitLab rails API for availability, which could be a concern, especially since that is usually a "non-free" feature (e.g. PostgreSQL replication and failover, Database load-balancing, traffic load balancer, Geo disaster recovery and, generally, all of Geo and most availability components are non-free).

- In general, this increases dependency on GitLab for deployments

Next steps (OBSOLETE, see next section):

- check if the GitLab Pages subsystem provides atomic updates

- see how GitLab Pages can be distributed to multiple hosts and how scalable it actually is or if we'll need to run the cache frontend in front of it. update: it can, but with significant caveats in terms of complexity, see above

- setup GitLab pages to test with small, non-critical websites (e.g. API documentation, etc)

- test the GitLab pages API-based configuration and see how it handles outages of the main rails API

- test the object storage system and see if it is usable, debuggable, highly available and performant enough for our needs

- keep track of upstream development of the GitLab pages architecture, see this comment from anarcat outlining some of those concerns

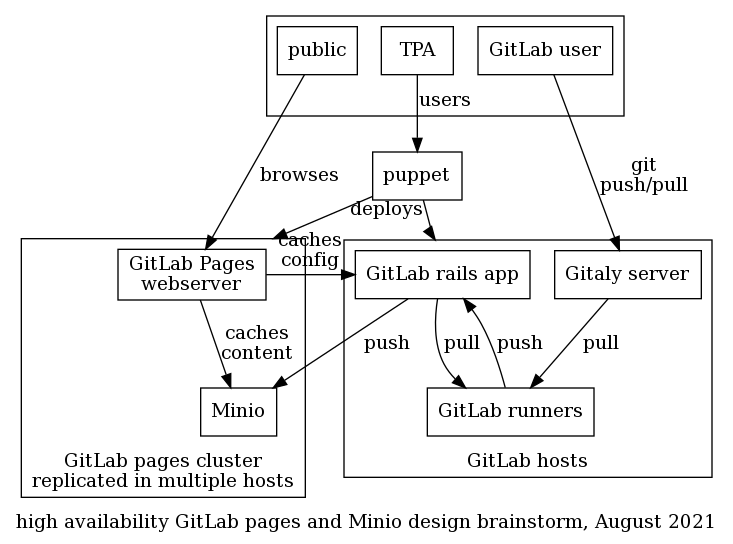

GitLab pages and Minio replacement

The above approach doesn't scale easily: the old GitLab pages implementation relied on NFS to share files between the main server and the GitLab pages server, so it was hard to deploy and scale.

The newer implementation relies on "object storage" (ie. S3) for content, and pings the main GitLab rails app for configuration.

In this comment of the related architecture update, it was acknowledged that "the transition from NFS to API seems like something that eventually will reduce the availability of Pages" but:

it is not that simple because how Pages discovers configuration has impact on availability too. In environments operating in a high scale, NFS is actually a bottleneck, something that reduces the overall availability, and this is certainly true at GitLab. Moving to API allows us to simplify Pages <-> GitLab communication and optimize it beyond what would be possible with modeling communication using NFS.

[...] But requests to GitLab API are also cached so GitLab Pages can survive a short outage of GitLab API. Cache expiration policy is currently hard-coded in the codebase, but once we address issue #281 we might be able to make it configurable for users running their GitLab on-premises too. This can help with reducing the dependency on the GitLab API.

Object storage (typically implemented with minio) is itself scalable and highly available, including Active-Active replicas. Object storage could also be used for other artifacts like Docker images, packages, and so on.

That design would take an approach similar to the above, but possibly discarding the cache system in favor of GitLab pages as caching frontends. In that sense:

- the mirror hosts could be replaced by the GitLab pages and Minio

- the source hosts could be replaced by some parts of the GitLab Pages system. unfortunately, that system relies on a custom webserver, but it might be possible to bypass that and directly access the on-disk files provided by the CI.

- there would be no master intermediate service

The architecture would look something like this:

This would deprecate the entire static-component architecture, which would eventually be completely retired.

The next step is to figure out a plan for this. We could start by testing custom domains (see tpo/tpa/team#42197 for that request) in a limited way, to see how it behaves and whether we like it. We would need to see how it interacts with torproject.org domains and whether there's automation we could do there. We would also need to scale GitLab first (tpo/tpa/team#40479) and possibly wait for the "webserver/website" stages of the Tails merge (TPA-RFC-73) before moving ahead.

This could look something like this:

- merge websites/web servers with Tails (tpo/tpa/team#41947)

- make an inventory of all static components and evaluate how they could migrate to GitLab pages

- limited custom domains tests (tpo/tpa/team#42197)

- figure out how to create/manage torproject.org custom domains

- scale gitlab (tpo/tpa/team#40479)

- scale gitlab pages for HA across multiple points of presence

- migrate test sites (e.g. status.tpo)

- migrate prod sites progressively

- retire static-components system

This implies a migration of all static sites into GitLab CI, by the way. Many sites are currently hand-crafted through shell commands, so that would need collaboration between multiple teams. dist.tpo might be particularly challenging, but has been due for a refactoring for a while anyways.

Note that the above roadmap is just a temporary idea written in June 2025 by anarcat. A version of that is being worked on in the tails website merge issue for 2026.

Replacing Jenkins with GitLab CI as a builder

NOTE: See also the Jenkins documentation and ticket 40364 for more information on the discussion on the different options that were considered on that front.

We have settled for the "SSH shim" design, which is documented in the static-shim page.

This is the original architecture design as it was before the migration: